by R.I. Pienaar | Jun 29, 2011 | Uncategorized

There have been many discussions about Facter 2, and one of the things I looked forward to was the ability to read arbitrary files or run arbitrary scripts in a directory to create facts.

This is pretty important, as a lot of typical users just aren’t Ruby coders and really all they want is to trivially add some facts. All too often the answer to common questions in the Puppet user groups ends up being “add a fact”, but when they look at adding facts it’s just way too daunting.

Sadly, as of today Facter 2 is mostly vaporware, so I created a quick (really quick) fact that reads the directory /etc/facts.d and parses text, JSON or YAML files, but can also run any executable in there.
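To make the idea concrete, here is a minimal Ruby sketch of what such a loader could do; it is not the actual fact published on GitHub. It assumes that plain text files and script output contain one `key=value` per line, that JSON and YAML files hold a single top-level hash, and that file types are told apart by extension (`.txt`, `.json`, `.yaml`/`.yml`). The method names are hypothetical.

```ruby
require "json"
require "yaml"

# Hypothetical helper: turn "key=value" lines into a Hash.
# Lines without "=" or with an empty key are ignored.
def parse_key_values(text)
  text.each_line.with_object({}) do |line, facts|
    key, value = line.chomp.split("=", 2)
    next if value.nil? || key.strip.empty?

    facts[key.strip] = value.strip
  end
end

# Hypothetical: read one file from a facts.d-style directory.
# Executables are run and their output parsed as key=value lines;
# other files are parsed according to their (assumed) extension.
def facts_from_file(path)
  return parse_key_values(IO.popen([path], &:read)) if File.executable?(path)

  data =
    case File.extname(path)
    when ".json"         then JSON.parse(File.read(path))
    when ".yaml", ".yml" then YAML.safe_load(File.read(path))
    when ".txt"          then parse_key_values(File.read(path))
    end
  data.is_a?(Hash) ? data : {}
end

# Merge facts from every regular file in the directory, in sorted
# filename order, so later files win on duplicate keys.
def load_facts_d(dir = "/etc/facts.d")
  return {} unless File.directory?(dir)

  Dir.glob(File.join(dir, "*")).sort.each_with_object({}) do |path, facts|
    facts.merge!(facts_from_file(path)) if File.file?(path)
  end
end
```

Treating every executable the same way, whatever its extension, is what lets a shell script like the one below act as a fact without any Ruby.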

To write a fact in a shell script that reads /etc/sysconfig/kernel on a Red Hat machine and reports the default kernel package name, simply do this:

#!/bin/sh

source /etc/sysconfig/kernel

echo "default_kernel=${DEFAULTKERNEL}"

Add it to /etc/facts.d and make it executable; now you can simply use your new fact:

% facter default_kernel

kernel-xen

Simple stuff. You can get the fact on my GitHub and deploy it using the standard method.

by R.I. Pienaar | Jun 11, 2011 | Uncategorized

Note: This project is now being managed by Puppet Labs; its new home is http://projects.puppetlabs.com/projects/hiera

Last week I posted the first details about my new data source for Hiera that enables its use in Puppet.

In that post I mentioned I wanted to do merge or array searches in the future. I took a first stab at that for array data and wanted to show how it works. I also mentioned I wanted to write a Hiera External Node Classifier (ENC), but in my mind this work now makes that completely redundant.

A common pattern in ENCs is that they layer data, very much like extlookup and Hiera do, but instead of doing a first-match search they combine the results into a merged list. This merged list is then used to include the classes on the nodes.

For a node in the production environment located in dc1 you’ll want:

node default {

include users::common

include users::production

include users::dc1

}

I’ve now made this trivial in Hiera. Consider the 3 files below:

common.json

{"classes":"users::common"}

production.json

{"classes":"users::production"}

dc1.json

{"classes":"users::dc1"}

With these files and an appropriate Hiera hierarchy configuration, you can achieve this using the node block below:

node default {

hiera_include("classes")

}

Any parametrized classes that use Hiera as in my previous post will simply do the right thing. The classes variable at each tier can be an array, so you can include many classes per tier. Now just add a role fact on your machines, add a role tier in Hiera and you’re all set.

The huge win here is that you do not need any stupid hacks like loading the facts from the Puppet Master’s vardir in your ENC to access the node facts, or any of the other hacky things people do in ENCs. This is simply a manifest doing what manifests do, just better.

The hiera CLI tool has been updated with array support; here it is running on the data above:

$ hiera -a classes

["users::common"]

$ hiera -a classes environment=production location=dc1

["users::common", "users::production", "users::dc1"]

I’ve also added a hiera_array() function that takes the same parameters as the hiera() function but that returns an array of the found data. The array capability will be in Hiera version 0.2.0 which should be out later today.
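The difference between the normal priority search and the new array search can be sketched in a few lines of Ruby. This is not Hiera's code: the in-memory `TIER_DATA` hash stands in for the three JSON files above, and the hierarchy (most specific tier first) is an assumption, so the order of the array result simply follows it and may differ from the CLI output above.

```ruby
# Stand-in for common.json, production.json and dc1.json above.
TIER_DATA = {
  "common"     => { "classes" => "users::common" },
  "production" => { "classes" => "users::production" },
  "dc1"        => { "classes" => "users::dc1" }
}.freeze

# Assumed hierarchy, most specific tier first.
HIERARCHY = ["%{location}", "%{environment}", "common"].freeze

# Expand %{var} against the node's scope; tiers whose variable is
# unknown expand to "" and are skipped.
def sources_for(scope)
  HIERARCHY.map { |tier| tier.gsub(/%\{(\w+)\}/) { scope.fetch(Regexp.last_match(1), "") } }
           .reject(&:empty?)
end

# Priority search: the first tier that defines the key wins.
def priority_lookup(key, scope)
  sources_for(scope).each do |source|
    data = TIER_DATA.fetch(source, {})
    return data[key] if data.key?(key)
  end
  nil
end

# Array search: every tier that defines the key contributes; a tier
# may hold a single class name or an array of them.
def array_lookup(key, scope)
  sources_for(scope).flat_map { |source| Array(TIER_DATA.fetch(source, {})[key]) }
end

scope = { "environment" => "production", "location" => "dc1" }
priority_lookup("classes", scope) # => "users::dc1"
array_lookup("classes", scope)    # => ["users::dc1", "users::production", "users::common"]
array_lookup("classes", {})       # => ["users::common"]
```

Using `Array(...)` is what lets a tier hold either one class or a list of classes without special casing.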

I should also mention that Luke Kanies took a quick stab at integrating Hiera into Puppet and the result is pretty awesome. Given the example below, Puppet will magically use Hiera if it’s available, else fall back to the old behavior.

class ntp::config($ntpservers="1.pool.ntp.org") {

.

.

}

node default {

include ntp::config

}

With Luke’s proposed changes this would be equivalent to:

class ntp::config($ntpservers=hiera("ntpservers", "1.pool.ntp.org")) {

.

.

}

This is pretty awesome. I wouldn’t hold my breath for this kind of flexibility in Puppet core soon, but it shows what’s possible.
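The fallback order described above (an explicitly passed parameter first, then Hiera when it is available, then the literal default) can be modelled as a small Ruby function. This is a hypothetical illustration of the behavior, not Luke's patch; `resolve_param` and the `hiera` callable are made-up names.

```ruby
# Hypothetical model of the proposed parameter resolution, not Puppet code.
#   explicit: a value passed via class{"ntp::config": ntpservers => ...}, or nil
#   hiera:    a callable standing in for hiera(name), or nil if Hiera is absent
def resolve_param(name, default:, explicit: nil, hiera: nil)
  return explicit unless explicit.nil?

  unless hiera.nil?
    found = hiera.call(name)
    return found unless found.nil?
  end

  default # old behavior: the default written in the class definition
end

hiera_data = { "ntpservers" => ["ntp1.example.com"] }
lookup = ->(key) { hiera_data[key] }

resolve_param("ntpservers", default: "1.pool.ntp.org")                # => "1.pool.ntp.org"
resolve_param("ntpservers", default: "1.pool.ntp.org", hiera: lookup) # => ["ntp1.example.com"]
```

An explicit parameter always wins, which is why a node can still pass its own values and bypass Hiera entirely.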

by R.I. Pienaar | Jun 6, 2011 | Uncategorized

Note: This project is now being managed by Puppet Labs; its new home is http://projects.puppetlabs.com/projects/hiera

Yesterday I released a hierarchical datastore called Hiera; today, as promised, I’ll show how it integrates with Puppet.

Extlookup solved the basic problem of loading data into Puppet. That was 3 years ago, before Puppet supported complex data in hashes or things like parametrized classes. Now that Puppet has improved, a new solution is needed. I believe the combination of Hiera and the Puppet plugin goes a very long way to solving this and making parametrized classes much more bearable.

I will highlight a sample use case where a module author places a module on the Puppet Forge and a module user downloads and uses the module. Both actors need to create data: the author needs default data to create a just-works experience, and the module user wants to configure the module behavior in YAML, JSON, Puppet files or anything else he can code.

Module Author

The most basic NTP module can be seen below. It has an ntp::config class that uses Hiera to read default data from ntp::data:

modules/ntp/manifests/config.pp

class ntp::config($ntpservers = hiera("ntpservers")) {

file{"/tmp/ntp.conf":

content => template("ntp/ntp.conf.erb")

}

}

modules/ntp/manifests/data.pp

class ntp::data {

$ntpservers = ["1.pool.ntp.org", "2.pool.ntp.org"]

}

This is your most basic NTP module. By using hiera("ntpservers") you load $ntpservers from the first of these variables that exists, in this case the last one:

- $ntp::config::data::ntpservers

- $ntp::data::ntpservers

This would be an extract from a Forge module; anyone who uses it will be configured to use the ntp.org pool NTP servers.

Module User

As a user I really want to use this NTP module from the Forge and not write my own, but I also need flexibility over which NTP servers I use. Generally that means forking the module and making local edits. Parametrized classes are supposed to make this better, but sadly their design means you need an ENC or a flexible data store. The data store was missing until now, and I really would not recommend using parametrized classes without one.

Because the NTP module above uses Hiera, as a user I now have various options to override its data. I configure Hiera to use the (default) YAML backend for data, but to also load the Puppet backend should the YAML one not provide an answer. I also configure it to allow per-location data, which gives me the flexibility to pick the NTP servers I need.

:backends:

  - yaml

  - puppet

:hierarchy:

  - "%{location}"

  - common

I now need to decide how best to override the data from the NTP module:

I want:

- Per-datacenter values when needed. The NOC at the data center can change this data without change control.

- A company-wide policy that should apply over the module defaults. This is company policy and should be subject to change control like my manifests.

Given these constraints I think the per-datacenter data can go into data files that are controlled outside of my VCS, for example with a web application or a simple editor. The common data that should apply company wide needs to be controlled under my VCS and managed by the change control board.

Hiera makes this easy. With the configuration above, the new Puppet data search path for a machine in dc1 would be:

- $data::dc1::ntpservers – based on the Hiera configuration, user specific

- $data::common::ntpservers – based on the Hiera configuration, user specific

- $ntp::config::data::ntpservers – users do not touch this, it’s for module authors

- $ntp::data::ntpservers – users do not touch this, it’s for module authors

You can see this extends the list seen above: the module author data space remains in use, but we now have a layer on top that we can use.
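The search path above can be derived mechanically from the hierarchy and the calling class. The Ruby sketch below does that; it assumes the user tiers live under a `data` namespace and that the two module-author entries are always appended last, as described above. `search_path` is a hypothetical helper, not part of hiera-puppet.

```ruby
# Hypothetical: compute the Puppet classes searched for a variable.
#   hierarchy:     user tiers from the Hiera config, e.g. ["%{location}", "common"]
#   scope:         node variables/facts used to expand %{...}
#   calling_class: the class doing the lookup, e.g. "ntp::config"
def search_path(hierarchy, scope, calling_class, namespace: "data")
  user_tiers = hierarchy
    .map { |tier| tier.gsub(/%\{(\w+)\}/) { scope.fetch(Regexp.last_match(1), "") } }
    .reject(&:empty?)
    .map { |tier| "#{namespace}::#{tier}" }

  calling_module = calling_class.split("::").first

  # Module-author data is always searched after the user tiers.
  user_tiers + ["#{calling_class}::#{namespace}", "#{calling_module}::#{namespace}"]
end

search_path(["%{location}", "common"], { "location" => "dc1" }, "ntp::config")
# => ["data::dc1", "data::common", "ntp::config::data", "ntp::data"]
```

Appending the variable name, `ntpservers`, to each entry gives the list of fully qualified variables shown above.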

First we create the company wide policy file in Puppet manifests:

modules/data/manifests/common.pp

class data::common {

$ntpservers = ["ntp1.example.com", "ntp2.example.com"]

}

As Hiera queries this before any in-module data, it effectively prevents any downloaded module from supplying NTP servers other than ours. This is a company-wide policy that applies to all machines unless specifically configured otherwise, and it lives with your code in your SCM.

Next we create the data file for machines whose $location fact is dc1. Note that this data lives in a YAML file outside of the manifests. You can use JSON or any other Hiera backend, so if you had this data in a CMDB in MySQL you could easily query it from there:

hieradb/dc1.yaml

---

ntpservers:

  - ntp1.dc1.example.com

  - ntp2.dc1.example.com

And this is the really huge win: you can create Hiera plugins that get this data from anywhere you like and magically override your in-manifest data.
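To show what plugging in a data source could look like, here is a Ruby sketch of backend chaining: each backend only has to answer `lookup(key, source)`, backends are tried in the configured order, and each walks the whole hierarchy before the next one is consulted. `HashBackend` and `chained_lookup` are hypothetical stand-ins; real Hiera backends use a different, richer interface.

```ruby
# Hypothetical backend answering from an in-memory hash; it stands in
# for a YAML directory, a CMDB query or any other data source.
class HashBackend
  def initialize(data)
    @data = data
  end

  # Return the value for key in the given tier, or nil when absent.
  def lookup(key, source)
    @data.fetch(source, {})[key]
  end
end

# A later backend only answers when every earlier backend had nothing
# for the key in any tier of the hierarchy.
def chained_lookup(backends, sources, key)
  backends.each do |backend|
    sources.each do |source|
      value = backend.lookup(key, source)
      return value unless value.nil?
    end
  end
  nil
end

yaml_like = HashBackend.new({ "dc1" => { "ntpservers" => ["ntp1.dc1.example.com", "ntp2.dc1.example.com"] } })
fallback  = HashBackend.new({ "common" => { "ntpservers" => ["ntp1.example.com", "ntp2.example.com"] } })

chained_lookup([yaml_like, fallback], ["dc1", "common"], "ntpservers")
# => ["ntp1.dc1.example.com", "ntp2.dc1.example.com"]
chained_lookup([yaml_like, fallback], ["office", "common"], "ntpservers")
# => ["ntp1.example.com", "ntp2.example.com"]
```

Because a backend only needs a `lookup` method, swapping the in-memory hash for a database query would not change the chaining logic.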

Finally here are a few Puppet node blocks:

node "web1.prod.example.com" {

$location = "dc1"

include ntp::config

}

node "web1.dev.example.com" {

$location = "office"

include ntp::config

}

node "oneoff.example.com" {

class{"ntp::config":

ntpservers => ["ntp1.isp.net"]

}

}

These 3 nodes will have different NTP configurations based on their location (you should really make $location a fact):

- web1.prod will use ntp1.dc1.example.com and ntp2.dc1.example.com

- web1.dev will use the data from class data::common

- oneoff.example.com is a completely weird case, and you can still use the normal parametrized syntax; in this case Hiera won’t be involved at all.

And so we have an easy-to-use, natural blend: param classes from an ENC still give full programmatic control, without sacrificing usability for beginners who cannot or do not want to invest the time to create an ENC.

The plugin is available on GitHub as hiera-puppet and you can just install the hiera-puppet gem. You will still need to install the Parser Function into your master until the work I did to make Puppet extendable using RubyGems is merged.

The example above is on GitHub and you can just use that to test the concept and see how it works without the need to plug into your Puppet infrastructure first. See the README.

The Gem includes extlookup2hiera that can convert extlookup CSV files into JSON or YAML.
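The conversion itself is straightforward. Below is a Ruby sketch of what such a converter might do; it is not the extlookup2hiera source. It assumes each CSV row is `key,value` and that rows with several value columns become arrays, then emits YAML or JSON with the standard library. `extlookup_csv_to_hash` is a hypothetical name.

```ruby
require "csv"
require "json"
require "yaml"

# Hypothetical converter: extlookup-style CSV text to a Hash.
# "key,value" gives a string; "key,v1,v2" gives an array (assumed).
def extlookup_csv_to_hash(csv_text)
  CSV.parse(csv_text).each_with_object({}) do |row, data|
    key, *values = row
    next if key.nil? || key.strip.empty? || values.empty?

    data[key] = values.size == 1 ? values.first : values
  end
end

csv = "ntpserver,1.pool.ntp.org\nntpservers,1.pool.ntp.org,2.pool.ntp.org\n"
data = extlookup_csv_to_hash(csv)

puts data.to_yaml               # YAML for the Hiera YAML backend
puts JSON.pretty_generate(data) # or JSON for hiera-json
```

Using the CSV library rather than splitting on commas keeps quoted values containing commas intact.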

by R.I. Pienaar | Jun 5, 2011 | Uncategorized

Note: This project is now being managed by Puppet Labs; its new home is http://projects.puppetlabs.com/projects/hiera

In my previous post I presented a new version of extlookup that is pluggable. This is fine but it’s kind of tightly integrated with Puppet and hastily coded. That code works – and people are using it – but I wanted a more mature and properly standalone model.

So I wrote a new standalone data store, unrelated to Puppet, that takes the main idea of hierarchical data from extlookup and makes it generally available.

I think the best model for representing key data items about infrastructure is a hierarchical structure.

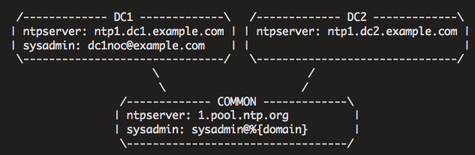

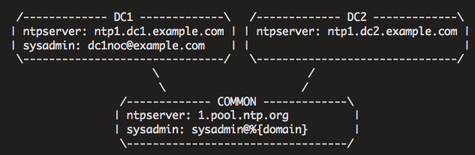

The image above shows the data model visually, in this case we need to know the Systems Administrator contact as well as the NTP servers for all machines.

If we had production machines in dc1 and dc2 and our dev/testing in our office, this model would give the production machines specific NTP servers while the rest would use the public NTP infrastructure. DC1 would additionally have a specific Systems Administrator contact; perhaps it’s outsourced to your DR provider.

This is the model that extlookup exposed to Puppet and that a lot of people are using extensively.

Hiera extracts this into a standalone project and ships with a YAML backend by default; JSON and Puppet backends are also available.

It extends the old extlookup model in a few key ways. It has configuration files of its own rather than relying on Puppet, you can chain multiple data sources together, and the data directories are now subject to scope variable substitution.

The chaining of data sources is a fantastic ability that I will detail in a follow up blog post showing how you would use this to create reusable modules and make Puppet parametrized classes usable – even without an ENC.

It’s available as a gem using a simple gem install hiera, and the code is on GitHub where there is an extensive README. There is also a companion project that lets you use JSON as a data store: gem install hiera-json. These are the first gems I have made in years, so no doubt they need some love; feedback is appreciated in GitHub issues.

Given the diagram above and data set up to match, you can query this data from the CLI (examples of the data are on GitHub):

$ hiera ntpserver location=dc1

ntp1.dc1.example.com

If you are on your Puppet Master or have your node fact YAML files handy, you can use those to provide the scope; here the YAML file has a location=dc2 fact:

$ hiera ntpserver --yaml /var/lib/puppet/yaml/facts/example.com

ntp1.dc2.example.com

I have a number of future plans for this:

- Currently you can only do priority-based searches. It will also support merge searches where each tier contributes to the answer; the answer will be a merged hash.

- A Puppet ENC should be written based on this data. This will require the merge searches mentioned above.

- More backends

- A webservice that can expose the data to your infrastructure

- Tools to create the data – this should really be Foreman and/or Puppet Dashboard like tools but certainly CLI ones should exist too.
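The planned merge search can be illustrated with a short Ruby sketch. It is hypothetical, since the feature did not exist yet: tiers are listed most specific first, every tier that has the key contributes a hash, and a more specific tier wins on conflicting keys. Only top-level keys are merged here; deeper merging would be a separate design choice. The contact data is made up.

```ruby
# Hypothetical hash-merge search over tiers ordered most specific first.
# Walking from the least specific tier upwards lets specific tiers override.
def merge_lookup(tiers, key)
  tiers.reverse.each_with_object({}) do |tier, result|
    value = tier[key]
    result.merge!(value) if value.is_a?(Hash)
  end
end

dc1    = { "contacts" => { "sysadmin" => "dc1-admins@example.com" } }
common = { "contacts" => { "sysadmin" => "admins@example.com", "noc" => "noc@example.com" } }

merged = merge_lookup([dc1, common], "contacts")
merged["sysadmin"] # => "dc1-admins@example.com" (from dc1)
merged["noc"]      # => "noc@example.com" (from common)
```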

I have written a new Puppet backend and Puppet function that can query this data. This has turned out really great and I will make a blog post dedicated to that later, for now you can see the README for that project for details. This backend lets you override in-module data supplied inside your manifests using external data of your choice. Definitely check it out.

by R.I. Pienaar | May 28, 2011 | Uncategorized

NOTE: This ended up being a proof of concept for a more complete system called Hiera; please consider that instead.

Back in 2009 I wrote the first implementation of extlookup for Puppet; later on, after a much-needed rewrite, it got merged into mainline Puppet. If you don’t know what extlookup does, please go and read that post first.

The hope at the time was that someone would make it better and not just a hacky function that uses global variables for its config. I was exploring some ideas and showing how rich data would apply to the particular use case and language of Puppet but sadly nothing has come of these hopes.

The complaints about extlookup fall into various categories:

- CSV does not make a good data store

- I have a personal hate for the global variable abuse in extlookup; I was hoping Puppet config items would become pluggable at some point, alas.

- Using functions does not let you introspect the data usage inside your modules for UI creation

- Other complaints fall in the ‘Not Invented Here’ category and the ‘TL;DR’ category of people who simply didn’t bother understanding what extlookup does

The complaint about using functions to handle data not being visible to external sources is valid. Puppet has not made introspection of classes and their parameters easy for ENCs yet, so this just seems to me like people who don’t understand that extlookup is simply a data model, not a prescription for how to use the data. In a follow-up post I will show an extlookup-based ENC that supports parametrized classes and magical data resolution for those parametrized classes using the exact same extlookup data store and precedence rules.

Not much to be done for the last group of people but as @jordansissel said “haters gonna hate, coders gonna code!”.

I have addressed the first complaint now by making an extlookup that is pluggable so you can bring different backends.

First of course, in bold defiance of the Ruby Way, I made it backward compatible with older versions of extlookup and gave it a 1:1 compatible CSV backend.

I addressed my global variable hate by adding a config file that might live in /etc/puppet/extlookup.yaml.

Status

I wrote this code yesterday afternoon, so you should already guess that there might be bugs and some redesigns ahead and that it will most likely destroy your infrastructure. I will add unit tests to it etc so please keep an eye on it and it will become mature for sure.

I have currently done backends for CSV, YAML and Puppet manifests. A JSON one will follow and later perhaps one querying Foreman and other data stores like that.

The code lives at https://github.com/ripienaar/puppet-extlookup.

Basic Configuration

Configuration of precedence is a setting that applies equally to all backends, the config file should live in the same directory as your puppet.conf and should be called extlookup.yaml. Examples of it below.

CSV Backend

The CSV backend is backward compatible; it will also respect your old-style global variables for configuration, but the other backends won’t. To configure it simply put something like this in your config file:

---

:parser: CSV

:precedence:

  - environment_%{environment}

  - common

:csv:

  :datadir: /etc/puppet/extdata

YAML Backend

The most common proposed alternatives to extlookup seem to be YAML based. The various implementations out there, though, are pretty weak, and their authors seem to have gotten bored with the idea before reaching feature parity with extlookup. With a pluggable backend it was easy enough for me to create a YAML data store that has all the extlookup features.

In the case of simple strings being returned I have kept the extlookup feature that interpolates variables like %{country} in the result data from the current scope (something mainline Puppet extlookup actually broke recently in a botched commit), but if you put hash or array data in the YAML files I don’t touch the data.
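The rule above (interpolate `%{variable}` in plain string results, but leave hash and array data alone) fits in a few lines of Ruby. This is a sketch of the described behavior, not the backend's actual code; the `scope` hash stands in for Puppet's variable scope, and turning unknown variables into empty strings is an assumption.

```ruby
# Interpolate %{var} from scope into String results only; arrays and
# hashes are returned untouched, as described above.
def interpolate_result(value, scope)
  return value unless value.is_a?(String)

  value.gsub(/%\{(\w+)\}/) { scope.fetch(Regexp.last_match(1), "").to_s }
end

scope = { "country" => "uk" }
interpolate_result("%{country}.pool.ntp.org", scope)   # => "uk.pool.ntp.org"
interpolate_result(["%{country}.pool.ntp.org"], scope) # => ["%{country}.pool.ntp.org"]
```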

Sample data:

---

country: uk

ntpservers:

  - 1.uk.pool.ntp.org

  - 2.uk.pool.ntp.org

foo.com:

  contact: webmaster@foo.com

  docroot: /var/www/foo.com

All of this data is accessible using the exact same extlookup function. Configuration of the YAML backend:

---

:parser: YAML

:precedence:

  - environment_%{environment}

  - common

:yaml:

  :datadir: /etc/puppet/extdata

Puppet Backend

Nigel Kersten has been working on a proposal for a new data format called the PDL. I had pretty high hopes for the initial targeted feature list, but now it seems to have been watered down to a minimal-feature-set extlookup with a different name and backend.

I implemented the proposed data lookup in classes and modules as an extlookup backend and made it as full featured as you’d expect from extlookup: fully configurable lookup orders and custom overrides, just like we’ve had for years in the CSV version.

Personally I think if you’re going to spend hours creating data that describes your infrastructure you should:

- Not stick it in a language that’s already proven to be bad at dealing with data

- Not stick it in a place where nothing else can query the data

- Not stick it in code that requires code audits for simple data changes – as most change control boards really just won’t see the difference.

- Not artificially restrict what kind of data can go into the data store by prescribing an unmovable convention with no configuration.

When I show the extlookup based ENC I am making I will really show why putting data in the Puppet Language is like a graveyard for useful information and not actually making anything better.

You can configure this backend to behave exactly the way Nigel designed it using this config file:

---

:parser: Puppet

:precedence:

  - "%{calling_class}"

  - "%{calling_module}"

:puppet:

  :datasource: data

Which will lookup data in these classes:

- data::$calling_class

- data::$calling_module

- $calling_class::data

- $calling_module::data

Or you can do better and configure proper precedence, which would replace the first 2 above with ones for datacenter, country, whatever. The last 2 will always be in the list. An alternative might be:

- data::$customer

- data::$environment

- $calling_class::data

- $calling_module::data

You could just configure this behavior with the extlookup precedence setting. Pretty nice for those of you feeling nostalgic for config.php files as hated by Sysadmins everywhere.

And as you can see you can also configure the initial namespace – data – in the config file.

by R.I. Pienaar | May 4, 2011 | Uncategorized

This is a follow-up post to other posts I’ve done regarding a new breed of monitoring that I hope to create.

I’ve had some time to think about configuration of monitoring. This is a big pain point in all monitoring systems. Many require you to configure all your resources, dependencies etc, often in text files. Others have an API that you can automate against, and the worst ones have only a GUI.

In the modern world where we have configuration management this ends up being a lot of duplication: your CM system already knows about inter-dependencies etc. Your CM’s facts system could know about contacts for a machine, and I am sure we could derive a lot of information from these catalogs. Today bigger sites tend to automate the building of monitoring config files using their CM systems, but this tends to be slow to adapt to network conditions and it’s quite a lot of work.

I spoke a bit about this in the CMDB session at Puppet Camp so thought I’d lay my thoughts down somewhere proper as I’ve been talking about this already.

I’ve previously blogged about using MCollective for monitoring based on discovery. In that post I pointed out that not all things are appropriate to monitor using this method, as you don’t know what is down. There is an approach to solving this problem though. MCollective supports building databases of what should be there; it’s called Registration. By correlating the discovered information with the registered information you can infer what is absent/unknown or down.
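At its simplest, that correlation is a set difference. In the Ruby sketch below, `registered` stands in for the registration database and `discovered` for the nodes that answered a discovery request; both lists and the `correlate` helper are hypothetical, not MCollective APIs.

```ruby
require "set"

# Registered but not discovered: unknown or down.
# Discovered but never registered: unexpected nodes.
def correlate(registered, discovered)
  expected = registered.to_set
  seen     = discovered.to_set
  { missing: (expected - seen).sort, unregistered: (seen - expected).sort }
end

registered = %w[smtp1.example.com smtp2.example.com db1.example.com]
discovered = %w[smtp1.example.com db1.example.com]

correlate(registered, discovered)[:missing] # => ["smtp2.example.com"]
```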

Ideally this is as much configuration as I want to have for monitoring mail queue sizes on all my smart hosts:

scheduler.every '1m' do

nrpe("check_mailq", :cf_class => "exim::smarthost")

end

This still leaves a huge problem: I can ask for a specific service to be monitored on a subset of machines, but I cannot infer parent-child relationships or know who to notify.

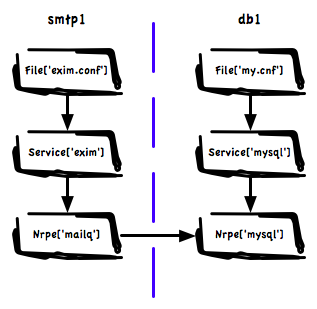

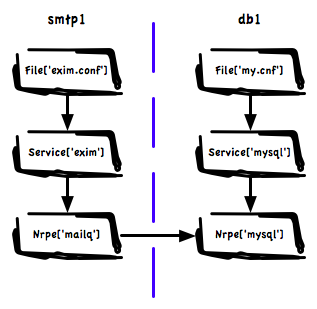

Now, as I am using Puppet to declare these services and Puppet-based discovery to select which machines to monitor, I would like to declare parent-child relationships in Puppet, even cross-node ones.

The approach we are currently testing is around loading all my catalogs for all my machines into Neo4J – a purpose built graph database. I am declaring relationships in the manifests and post processing the graph to create the cross node links.


This means we have a huge graph of graphs containing all inter-node dependencies. The image shows visually how a small part of this might look. Here we have an Exim service that depends on a database on a different machine because we use a MySQL-based greylisting service.

Using this graph we can answer many questions, among others:

- When doing notifications on a failure in MySQL do not notify about mailq on any of the mail servers

- What other services are affected by a failure on the MySQL server; if you expose this to your NOC in a good UI, you’ll have to maintain a whole lot less documentation and they will know who to call.

- If we are going to do maintenance on the MySQL server, what related systems should we schedule downtime on

- What single points of failure exist in the infrastructure

- While planning maintenance on shared resources in big teams with many different groups using databases, find all stakeholders

- Create an action rule that cleanly shuts down all Exim instances after a failure of the MySQL server; mail will spool safely at the senders
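Several of these questions reduce to walking the dependency graph. As a small illustration without Neo4J, the Ruby sketch below stores edges as a hash from each resource to the resources that depend on it, and does a breadth-first walk to find everything transitively affected by a failure. The resource names mirror the Exim/MySQL example but are hypothetical, and this is not the graph tooling described in this post.

```ruby
# Hypothetical dependency graph: resource => resources that depend on it.
DEPENDENTS = {
  "mysql@db1"  => ["exim@smtp1", "exim@smtp2"],
  "exim@smtp1" => ["mailq@smtp1"],
  "exim@smtp2" => ["mailq@smtp2"]
}.freeze

# Breadth-first walk returning every resource transitively affected by
# a failure of `failed`, in discovery order, excluding `failed` itself.
def affected_by(failed, graph = DEPENDENTS)
  visited = [failed]
  queue   = [failed]
  until queue.empty?
    graph.fetch(queue.shift, []).each do |dependent|
      next if visited.include?(dependent)

      visited << dependent
      queue << dependent
    end
  end
  visited.drop(1)
end

impacted = affected_by("mysql@db1")
# => ["exim@smtp1", "exim@smtp2", "mailq@smtp1", "mailq@smtp2"]

# Suppress mailq notifications while MySQL is the root cause.
suppressed = impacted.select { |r| r.start_with?("mailq@") }
```

Tracking visited nodes keeps the walk finite even if the graph accidentally contains a cycle.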

If we combine this with a rich set of facts we can create a testing framework, perhaps something Cucumber based, that lets us express infrastructure tests. Platform managers should be able to express baseline design principles the various teams should comply with. These tests are especially important in dynamic environments like ones managed by cloud auto scalers:

- Find all machines with no declared dependencies

- Write a test to check that all shards in a MongoDB cluster have more than 1 member

- Make sure all our MySQL databases are not in the same availability zone

- Find services that depend on each other but that co-habit in the same rack.

- If someone accidentally removes a class from Puppet that manages a DB machine, alert on all failed dependencies that are now unmanaged
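Rules like these can be written as plain checks over node data. The Ruby sketch below covers two of them: machines with no declared dependencies, and MySQL servers that all sit in a single availability zone. The node records and their fields (`classes`, `zone`, `depends_on`) are hypothetical; a real version would query the graph database instead.

```ruby
# Hypothetical node records, as they might be derived from catalogs and facts.
NODES = [
  { name: "db1",   classes: ["mysql::server"],   zone: "us-east-1a", depends_on: [] },
  { name: "db2",   classes: ["mysql::server"],   zone: "us-east-1a", depends_on: [] },
  { name: "smtp1", classes: ["exim::smarthost"], zone: "us-east-1b", depends_on: ["db1"] }
].freeze

# Machines that declare no dependencies at all.
def without_dependencies(nodes)
  nodes.select { |n| n[:depends_on].empty? }.map { |n| n[:name] }
end

# True when every node carrying the class lives in one zone (a violation).
def single_zone?(nodes, klass)
  zones = nodes.select { |n| n[:classes].include?(klass) }.map { |n| n[:zone] }.uniq
  zones.size == 1
end

without_dependencies(NODES)           # => ["db1", "db2"]
single_zone?(NODES, "mysql::server")  # => true, so this rule fails
```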

And finally we can create automated queries into this database:

- When auto scaling make sure we never end up shutting down machines that would break a dependency

- For an outage on the MySQL server, find all related nodes and their contact information, and notify the right people

- When adding nodes using auto scalers make sure we start nodes in different availability zones. If we overlay latency information we can intelligently pick the fastest non-local zone to place a node

The possibilities of pulling in graphs from CM all into one huge queryable data source that understands structure and relationships is really endless. You can see how we have enough information here to derive all the parent child relationships we need for intelligent monitoring.

Ideally Puppet itself would support cross-node dependencies, but I think that’s some way off, so we have created a hacky solution to declare the relationships now. I think, though, that we need a rich set of relationships: hard relationships like we have in Puppet now, where failure will cause other resources to fail, but also soft relationships that exist only to declare relationships that other systems, like monitoring, will query.

This is a simple overview of what I have in mind, I expect in the next day or three a follow up post by a co-worker that will show some of the scripts we’ve been working on showing actual queries over this huge graph. We have it working, just polishing things up a bit still.

On a side note, I think one of the biggest design wins in Puppet is that it’s data based. It’s not just a bunch of top-down scripts being run like the old Bash scripts you used to build boxes with. It’s a directed graph with relationships that’s queryable and can be used to build other systems. This is a big deal in next-generation thinking about systems, and I think the above post highlights just a small number of the possibilities this graph brings.
