by R.I. Pienaar | Jan 13, 2010 | Code

Just a quick blog post for those who follow me here to get notified about new releases of MCollective. I just released version 0.4.2, which brings big improvements to the Debian packages, some tweaks to the command line and a bug fix in SimpleRPC.

Read all about it at the Release Notes.

by R.I. Pienaar | Jan 13, 2010 | Code

Google Code does not provide its own usable export methods for projects, so we need to make do on our own. There seems to be no sane way to back up the tickets, but for SVN, which includes the wiki, you can use svnsync.

Here’s a little script to automate this. Just give it a PREFIX of your choice and a list of projects in the PROJECTS variable, cron it, and it will happily keep your repos in sync.

It outputs its actions to STDOUT, so you should redirect the output either in the script or in the cron entry.

    #!/bin/bash

    # Google Code project names to mirror
    PROJECTS=( your project list )

    # Directory that holds one local mirror repository per project (absolute path)
    PREFIX="/path/to/backups"

    [ -d "${PREFIX}" ] || mkdir -p "${PREFIX}"
    cd "${PREFIX}" || exit 1

    for prj in "${PROJECTS[@]}"
    do
        # First run for this project: create the mirror and point it at Google Code
        if [ ! -d "${PREFIX}/${prj}/conf" ]; then
            svnadmin create "${prj}"
            # svnsync sets revision properties, so this hook has to succeed
            ln -s /bin/true "${PREFIX}/${prj}/hooks/pre-revprop-change"
            svnsync init "file://${PREFIX}/${prj}" "http://${prj}.googlecode.com/svn"
        fi

        svnsync sync "file://${PREFIX}/${prj}"
    done

I strongly suggest you back up even your cloud-hosted data.

by R.I. Pienaar | Dec 22, 2009 | Code

MCollective is a framework for writing RPC-style tools that talk to a cloud of servers. Until now, doing that has been surprisingly hard for non-Ruby coders, because I was focussing on getting the framework built and feeling my way around the use cases.

I’ve now spent 2 days working on simplifying actually writing agents and consumers. This code is not released yet – just in SVN trunk – but here’s a taster.

First, writing an agent should be simple. Here’s a simple ‘echo’ server that takes a message as input and returns it.

    class Rpctest<RPC::Agent
        # Basic echo server
        def echo_action(request, reply)
            raise MissingRPCData, "please supply a :msg" unless request.include?(:msg)

            reply.data = request[:msg]
        end
    end

This creates an echo action, does a quick check that a message was received and sends it back. I want to create a few more validators so you can check easily if the data passed to you is sane and secure if you’re doing anything like system() calls with it.
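As a rough illustration of the kind of validator mentioned above, here is a minimal sketch in plain Ruby. The `validate!` helper, the `ValidationError` class and the `SAFE_TEXT` pattern are all hypothetical names made up for this example; they are not part of MCollective. The idea is simply to reject missing or suspicious input before it gets anywhere near a system() call.

```ruby
# Hypothetical helper, not part of MCollective: checks one request field.
class ValidationError < StandardError; end

# Only letters, digits, underscore, space, dot and dash are treated as safe here.
SAFE_TEXT = /\A[\w .-]+\z/

# Returns the value when it is present, a String, and matches the pattern;
# raises ValidationError with a readable message otherwise.
def validate!(request, key, pattern)
  value = request[key]
  raise ValidationError, "please supply a :#{key}" if value.nil?
  raise ValidationError, ":#{key} should be a string" unless value.is_a?(String)
  raise ValidationError, ":#{key} contains unexpected characters" unless pattern.match(value)
  value
end

puts validate!({ :msg => "hello world" }, :msg, SAFE_TEXT)

begin
  validate!({ :msg => "hello; rm -rf /" }, :msg, SAFE_TEXT)
rescue ValidationError => e
  puts "rejected: #{e.message}"
end
```

An agent action could call a helper like this at the top and let the exception travel back to the caller, in the same way the echo action raises when :msg is missing.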

Here’s the client code that calls the echo server 4 times:

    #!/usr/bin/ruby

    require 'mcollective'

    include MCollective::RPC

    rpctest = rpcclient("rpctest")

    puts "Normal echo output, non verbose, shouldn't produce any output:"
    printrpc rpctest.echo(:msg => "hello world")

    puts "Flattened echo output, think combined 'mailq' usecase:"
    printrpc rpctest.echo(:msg => "hello world"), :flatten => true

    puts "Forced verbose output, if you always want to see every result"
    printrpc rpctest.echo(:msg => "hello world"), :verbose => true

    puts "Did not specify needed input:"
    printrpc rpctest.echo

This client supports full discovery and all the usual stuff, and has pretty --help output and everything else you’d expect from the clients I’ve supplied with the core mcollective. It caches discovery results, so the above code will do one discovery only and reuse it for the other calls to the collective.
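The discovery caching can be pictured as plain memoization. The sketch below is only an illustration under that assumption: `CachingClient` and its methods are hypothetical and do not reflect how the MCollective client is actually written. It runs a discovery block once and reuses the node list for every later call.

```ruby
# Hypothetical stand-in for a client that caches discovery; not MCollective code.
class CachingClient
  attr_reader :discovery_runs

  # The block passed in performs the (expensive) discovery and returns node names.
  def initialize(&discoverer)
    @discoverer = discoverer
    @discovery_runs = 0
    @nodes = nil
  end

  # Run discovery on first use only, then reuse the stored node list.
  def discover
    return @nodes unless @nodes.nil?
    @discovery_runs += 1
    @nodes = @discoverer.call
  end

  # Pretend to call an action on every discovered node.
  def call(action)
    discover.map { |node| "#{node}: #{action}" }
  end
end

client = CachingClient.new { %w[dev1 dev2 dev3] }
3.times { client.call("echo") }
puts "discovery ran #{client.discovery_runs} time(s)"
```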

When the client runs you’ll see a twirling status indicator. This gives you a nice non-scrolling indicator of progress and should work well for 100s of machines without spamming you with noise; at the end of the run you’ll get the output.

The printrpc helper function tries its best to print output for you in a way that makes sense across large numbers of machines.

- By default it doesn’t print stuff that succeeds, though you do get an overall progress indicator.

- If anything does go wrong, useful information gets printed, but only for hosts that had problems.

- If you ran the client with --verbose, or forced it into verbose mode, you’ll get full information about the result from every server.

- It supports flags to modify the output; you can flatten the output so hostnames etc. aren’t shown, just a concatenation of the data.
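The rules in the list above can be sketched as a small formatting function. This is not the printrpc implementation: `format_results`, the result hash keys (`:sender`, `:statuscode`, `:statusmsg`, `:data`) and the choice to skip failed hosts in flattened mode are all assumptions made for the sake of illustration.

```ruby
# Hypothetical sketch of the output rules; not the real printrpc helper.
# Each result is assumed to be a hash with :sender, :statuscode, :statusmsg, :data.
def format_results(results, options = {})
  results.each_with_object([]) do |result, lines|
    ok = result[:statuscode] == 0

    if options[:flatten]
      # Flattened: only the data, no hostnames (failed hosts skipped by assumption)
      lines << result[:data].to_s if ok
    elsif options[:verbose] || !ok
      # Verbose shows every host; the default shows only hosts with problems
      lines << "#{result[:sender]} : #{ok ? 'OK' : result[:statusmsg]}"
      lines << result[:data].inspect if ok
    end
  end
end

results = [
  { :sender => "dev1.your.com", :statuscode => 0, :statusmsg => "OK", :data => "hello world" },
  { :sender => "dev2.your.com", :statuscode => 1, :statusmsg => "please supply a :msg", :data => nil }
]

puts format_results(results)
puts format_results(results, :verbose => true)
puts format_results(results, :flatten => true)
```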

The rpctest.rb client gives the following output when run in non-verbose mode:

    $ rpctest.rb --with-class /devel/
    Normal echo output, non verbose, shouldn't produce any output:

    Forced verbose output, if you always want to see every result:
    dev1.your.com : OK
    "hello world"

    dev2.your.com : OK
    "hello world"

    dev3.your.com : OK
    "hello world"

    Flattened echo output, think combined 'mailq' usecase:
    hello world
    hello world
    hello world

    Did not specify needed input:
    dev1.your.com : please supply a :msg
    dev2.your.com : please supply a :msg
    dev3.your.com : please supply a :msg

Still some work to do; specifically, stats need a rethink for scenarios where you are making many calls, as in this script.

This will be in mcollective version 0.4, hopefully out in early January 2010.

by R.I. Pienaar | Dec 1, 2009 | Code

I am pleased to announce the first actual numbered release of The Marionette Collective. You can grab it from the downloads page.

Until now, people wanting to test this had to pull it out of SVN directly. I put off doing a release until I had most of the major tick boxes in my mind ticked and until I knew I wouldn’t be making any major changes to the various plugins and such. This release is 0.2.x since 0.1.x was the release number I used locally for my own testing.

This being the first release, I fully anticipate some problems and weirdness. Please send any concerns to the mailing list or ticketing system.

This has been a while coming. I’ve posted lots on this blog about mcollective, what it is and what it does. For those just joining, you may want to watch the video on this post for some background.

I am keen to get feedback from some testers, specifically keen to hear thoughts around these points:

- How do the client tools behave on 100s of nodes? I suspect the output format might be useless if it just scrolls and scrolls. I have some ideas about this but need feedback.

- With a large number of hosts, or when doing lots of requests soon after each other, do you notice any replies going missing?

- Feedback about the general design principles, especially how you find the plugin system and what else you might want pluggable. I, for example, want to make it much easier to add new discovery methods.

- Anything else you can think of.

I’ll be putting in tickets on the issue system for future features / fixes I am adding so you can track there to get a feel for the milestones toward 0.3.x.

Thanks go to the countless people I spoke to in person, on IRC and on Twitter; thanks for all the retweets and general good wishes. Special thanks also to Chris Read, who made the Debian package code and fixed up the RC script to be LSB compliant.

by R.I. Pienaar | Nov 18, 2009 | Code

As part of rolling out mcollective you need to think about security. The various examples in the quick start guide and on this blog have allowed all nodes to talk to all agents. The problem with this approach is that, should you have untrusted users on a node, they can install the client applications, read the username/password from the server config file and thus control your entire architecture.

Since revision 71 of trunk the structure of messages has changed to be compatible with the ActiveMQ authorization structure, and I’ve also made the structure of the message targets configurable. The new default format is compatible with ActiveMQ wildcard patterns, so we can now apply fine-grained controls over who can speak to what.

General information about ActiveMQ Security can be found on their wiki.

The default message targets look like this:

    /topic/mcollective.agentname.command
    /topic/mcollective.agentname.reply

The nodes only need read access to the command topics and only need write access to the reply topics. The examples below also give them admin access so these topics can be created dynamically. For simplicity we’ll wildcard the agent names, you could go further and limit certain nodes to only run certain agents. Adding these controls effectively means anyone who gets onto your node will not be able to write to the command topics and so will not be able to send commands to the rest of the collective.

There’s one special case and that’s the registration topic, if you want to enable the registration feature you should give the nodes access to write on the command channel for the registration agent. Nothing should reply on the registration topic so you can limit that in the ActiveMQ config.

We’ll let mcollective log in as the mcollective user and create a group called mcollectiveusers. We’ll then give the mcollectiveusers group the access needed to run as a typical registration-enabled mcollective node.

The rip user is an mcollective admin and can create commands and receive replies.

First we’ll create the users and the groups.

    <simpleAuthenticationPlugin>
      <users>
        <authenticationUser username="mcollective" password="pI1SkjRi" groups="mcollectiveusers,everyone"/>
        <authenticationUser username="rip" password="foobarbaz" groups="admins,everyone"/>
      </users>
    </simpleAuthenticationPlugin>

Now we’ll create the access rights:

    <authorizationPlugin>
      <map>
        <authorizationMap>
          <authorizationEntries>
            <authorizationEntry queue="mcollective.>" write="admins" read="admins" admin="admins" />
            <authorizationEntry topic="mcollective.>" write="admins" read="admins" admin="admins" />
            <authorizationEntry topic="mcollective.*.reply" write="mcollectiveusers" admin="mcollectiveusers" />
            <authorizationEntry topic="mcollective.registration.command" write="mcollectiveusers" read="mcollectiveusers" admin="mcollectiveusers" />
            <authorizationEntry topic="mcollective.*.command" read="mcollectiveusers" admin="mcollectiveusers" />
            <authorizationEntry topic="ActiveMQ.Advisory.>" read="everyone,all" write="everyone,all" admin="everyone,all"/>
          </authorizationEntries>
        </authorizationMap>
      </map>
    </authorizationPlugin>

You could give just the specific node that runs the registration agent access to mcollective.registration.command to ensure the secrecy of your node registration.
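To reason about who may write where, the topic entries above can be checked by hand with a small wildcard matcher. The following is a simplified sketch, not ActiveMQ code: it assumes `.` separates name elements, `*` matches exactly one element, `>` matches everything from that point on, and that the write lists of all matching entries are combined. `destination_match?`, `writers_for` and `WRITE_ACL` are hypothetical names, and the table copies only the write attributes of the mcollective topic entries.

```ruby
# Simplified wildcard matcher; a sketch of the matching rules, not ActiveMQ code.
def destination_match?(pattern, destination)
  pattern_parts = pattern.split(".")
  name_parts = destination.split(".")

  pattern_parts.each_with_index do |part, i|
    return true if part == ">"             # matches the rest of the name
    return false if i >= name_parts.length # pattern is longer than the name
    return false unless part == "*" || part == name_parts[i]
  end

  pattern_parts.length == name_parts.length
end

# Write permissions copied from the topic entries in the config above.
WRITE_ACL = {
  "mcollective.>"                    => ["admins"],
  "mcollective.*.reply"              => ["mcollectiveusers"],
  "mcollective.registration.command" => ["mcollectiveusers"],
  "mcollective.*.command"            => []
}

# Groups allowed to write, assuming all matching entries are combined.
def writers_for(destination)
  WRITE_ACL.select { |pattern, _groups| destination_match?(pattern, destination) }
           .values.flatten.uniq
end

%w[mcollective.rpctest.command mcollective.rpctest.reply mcollective.registration.command].each do |topic|
  puts "#{topic}: #{writers_for(topic).join(', ')}"
end
```

Under these assumptions only admins can write to an ordinary command topic, which is the property the configuration is after: someone holding just the node credentials cannot send commands to the rest of the collective.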

Finally, the nodes need to be configured. The server.cfg should have at least the following:

    topicprefix = /topic/mcollective
    topicsep = .
    plugin.stomp.user = mcollective
    plugin.stomp.password = pI1SkjRi
    plugin.psk = aBieveenshedeineeceezaeheer

For my clients I can use the ability to configure the user details in my shell environment:

    export STOMP_USER=rip
    export STOMP_PASSWORD=foobarbaz
    export STOMP_SERVER=stomp1
    export MCOLLECTIVE_PSK=aBieveenshedeineeceezaeheer

And finally, the rip user, when logged into a shell with these variables set, has full access to the various commands. You can now give different users access to the entire collective, or go further and give a certain admin user access to only run certain agents by limiting the command topics they have access to. Keeping the user and password settings in the shell environment means they are not kept in any config file in /etc/, for example.

by R.I. Pienaar | Nov 10, 2009 | Code

As part of deploying MCollective + ActiveMQ instead of my old Spread-based system I need to figure out a multi-location setup. The documentation says it’s possible, so I thought I had better get down and figure it out.

In my case I will have per-country ActiveMQ instances. I’ve had the same with Spread in the past and it’s proven reliable enough for my needs. Each ActiveMQ will carry 30 or so nodes.

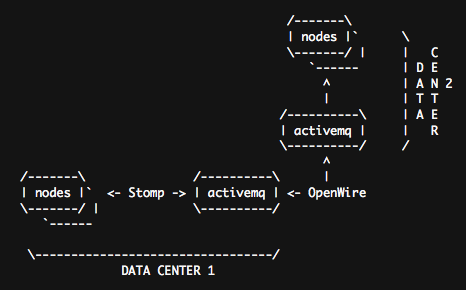

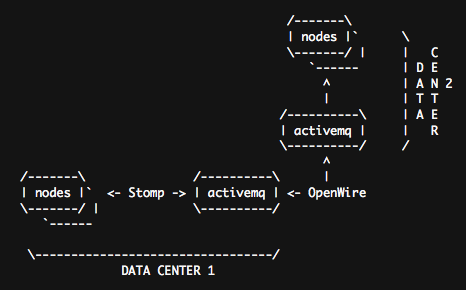

ActiveMQ Cluster

The above image shows a possible setup. You can go much more complex: you can do typical hub-and-spoke setups, a fully meshed setup, or maybe have a local one in your NOC etc. ActiveMQ is clever enough not to create message loops or storms if you create loops, so you can build lots of resilient routes.

ActiveMQ calls this a Network of Brokers and the minimal docs can be found here. They also have docs on using SSL for connections; you can encrypt the inter-DC traffic using that.

I’ll show a sample config below for one ActiveMQ node; the other would be identical except for the IP of its partner. The sample uses authentication between links as I think you really should be using auth everywhere.

    <broker xmlns="http://activemq.org/config/1.0" brokerName="your-host" useJmx="true"
            dataDirectory="${activemq.base}/data">

      <transportConnectors>
        <transportConnector name="openwire" uri="tcp://0.0.0.0:6166"/>
        <transportConnector name="stomp" uri="stomp://0.0.0.0:6163"/>
      </transportConnectors>

These are basically your listeners, we want to accept Stomp and OpenWire connections.

Now comes the connection to the other ActiveMQ server:

      <networkConnectors>
        <networkConnector name="amq1-amq2" uri="static:(tcp://192.168.1.10:6166)" userName="amq" password="Afuphohxoh"/>
      </networkConnectors>

This sets up a connection to the remote server at 192.168.1.10 using username amq and password Afuphohxoh. You can also designate failover and backup links; see the docs for samples. If you’re building lots of servers talking to each other you should give every link on every server a unique name. Here I called it amq1-amq2 for comms from a server called amq1 to amq2, a simple naming scheme that ensures things are unique.
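For a larger mesh, the from-to naming scheme is easy to generate rather than type by hand. The sketch below is purely illustrative: `link_names` is a hypothetical helper and the broker names are examples. It lists one name per direction for every pair of brokers, so each link on each server ends up with a distinct name.

```ruby
# Hypothetical helper: one "from-to" link name per direction between brokers.
def link_names(brokers)
  # permutation(2) yields every ordered pair, so amq1-amq2 and amq2-amq1 both appear
  brokers.permutation(2).map { |from, to| "#{from}-#{to}" }
end

puts link_names(%w[amq1 amq2 amq3])
```

The names stay unique as long as the broker names themselves are unique.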

Next up comes the Authentication and Authorization bits, this sets up the amq user and an mcollective user that can use the topic /topic/mcollective.*. More about ActiveMQ’s security model can be found here.

      <plugins>
        <simpleAuthenticationPlugin>
          <users>
            <authenticationUser username="amq" password="Afuphohxoh" groups="admins,everyone"/>
            <authenticationUser username="mcollective" password="pI1jweRV" groups="mcollectiveusers,everyone"/>
          </users>
        </simpleAuthenticationPlugin>

        <authorizationPlugin>
          <map>
            <authorizationMap>
              <authorizationEntries>
                <authorizationEntry queue=">" write="admins" read="admins" admin="admins" />
                <authorizationEntry topic=">" write="admins" read="admins" admin="admins" />
                <authorizationEntry topic="mcollective.>" write="mcollectiveusers" read="mcollectiveusers" admin="mcollectiveusers" />
                <authorizationEntry topic="ActiveMQ.Advisory.>" read="everyone,all" write="everyone,all" admin="everyone,all"/>
              </authorizationEntries>
            </authorizationMap>
          </map>
        </authorizationPlugin>
      </plugins>
    </broker>

If you set up the other node with a configuration connecting back to this one you will have bi-directional messages working correctly.

You can now connect your MCollective clients to either one of the servers and everything will work as if you had only one server. ActiveMQ servers will attempt reconnects regularly if the connection breaks.

You can also test using my generic stomp client that I posted in the past.