by R.I. Pienaar | Jun 24, 2009 | Front Page

As mentioned earlier, I’ll be going to South Africa for 2 weeks soon, and I have a few talks lined up.

I’ll be talking at the Gauteng LUG on the 1st of July 2009, and I will give the same talk at the Cape Town LUG the following Thursday, the 9th of July.

The talks will be about Configuration Management and about Puppet. Both are pretty huge subjects, so I won’t pretend to cover either in depth.

I’ll just run through some problems that teams who use Linux or Unix have encountered, and how CM systems can help with those problems.

I’ll work through a sample of installing, configuring and starting Apache using Puppet. Finally I’ll show how company standards can be encapsulated in simple re-usable Puppet logic, so that large numbers of vhosts can be rolled out to those standards with minimal effort. The demonstration will be done using 2 virtual machines, one being managed by the other using Puppet.

Ideally the talks won’t be just me standing up and going on for x minutes; I’ll invite participation and answer any questions.

by R.I. Pienaar | Jun 16, 2009 | Front Page

I’ll be heading to South Africa for roughly 2 weeks, from the end of June to early July. I’ll be visiting Johannesburg, Potchefstroom and Cape Town.

I’d be keen to hear from anyone interested in having some hours from me for consultation. It’s not really a lot of time to spend with people, so I am not sure how much would be viable.

I think it might be worthwhile for companies who are considering adopting Puppet for configuration management to meet with me. I’d definitely be able to help you decide if it’s the right tool for you, or even whether you should consider configuration management at all.

I might also be open to having a short talk about Puppet if there are any LUGs or something that would be interested in hearing about CM or Puppet.

by R.I. Pienaar | May 28, 2009 | Front Page

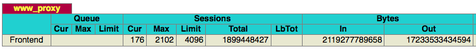

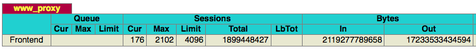

Load balancers are some of the most expensive bits of equipment small to medium size sites are likely to buy, even more expensive than database servers.

Since I help a number of smaller and young startups, a good Open Source load balancer is essential. I use HAProxy for this purpose.

HAProxy is a high performance, non-threaded load balancer. It supports a lot of really excellent features, like regular expression based logic to route certain types of requests to different backend servers and session tracking using cookies or URL parts, and it has extensive documentation.

Getting a fully redundant set of load balancers going with it requires the help of something like Linux-HA, which I use extensively for this purpose. The combination of HAProxy and Linux-HA gives you a full active-passive cluster with failover capabilities that really does work a charm.

I recently had to reload a HAProxy instance after about 100 days of uptime. Its performance stats were 1.8 billion requests, 15TB out and just short of 2TB in.

Worth checking out HAProxy before shelling out GBP15 000 for 2 x hardware load balancers.
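To put the uptime figures quoted above in perspective, here is a rough back-of-the-envelope calculation. It is only a sketch: it assumes exactly 100 days of uptime and decimal terabytes (1 TB = 10^12 bytes), neither of which the stats state precisely, and the variable names are made up for illustration.

```shell
#!/bin/sh
# Rough averages from the figures quoted in the post.
# Assumptions: exactly 100 days of uptime, decimal terabytes (1 TB = 10^12 bytes).
requests=1800000000          # 1.8 billion requests
bytes_out=15000000000000     # 15 TB sent to clients
seconds=$((100 * 86400))     # 100 days expressed in seconds

# Integer division, so the results are rounded down.
req_per_sec=$((requests / seconds))
bytes_out_per_sec=$((bytes_out / seconds))
avg_response_bytes=$((bytes_out / requests))

echo "average requests per second:  $req_per_sec"
echo "average bytes out per second: $bytes_out_per_sec"
echo "average bytes per response:   $avg_response_bytes"
```

Under those assumptions this works out to a little over 200 requests per second and roughly 1.7 MB/s outbound on average, sustained for the whole period. Peaks will of course have been higher than the average.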

by R.I. Pienaar | May 4, 2009 | Front Page, Usefull Things

I thought it’s high time I got to spend some time with IPv6, so I signed up for a static tunnel from sixxs.net. Apart from taking some time, it’s a fairly painless process to get going.

I chose a static tunnel since I am just 9ms from one of their brokers and my machine is up all the time anyway. They have some docs on how to get RedHat machines talking to them, but these were not particularly accurate, so this is what I did:

You’ll get a mail from them listing your details, something like this:

Tunnel Id : T21201

PoP Name : dedus01 (de.speedpartner [AS34225])

Your Location : Gunzenhausen, de

SixXS IPv6 : 2a01:x:x:x::1/64

Your IPv6 : 2a01:x:x:x::2/64

SixXS IPv4 : 91.184.37.98

Tunnel Type : Static (Proto-41)

Your IPv4 : 78.x.x.x

Using this you can now configure your CentOS machine to bring the tunnel up. You need to edit these files:

/etc/sysconfig/network:

NETWORKING_IPV6=yes

IPV6_DEFAULTDEV=sit1

/etc/sysconfig/network-scripts/ifcfg-sit1

DEVICE=sit1

BOOTPROTO=none

ONBOOT=yes

IPV6INIT=yes

IPV6_TUNNELNAME="sixxs"

IPV6TUNNELIPV4="91.184.37.98"

IPV6TUNNELIPV4LOCAL="78.x.x.x"

IPV6ADDR="2a01:x:x:x::2/64"

IPV6_MTU="1280"

TYPE=sit
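The ifcfg-sit1 file above can also be generated from the three values in the SixXS email. The following is a minimal sketch, not part of the original setup: the function name `make_ifcfg_sit1` and its argument order are hypothetical, and the addresses in the example call are documentation-range placeholders rather than real tunnel endpoints.

```shell
#!/bin/sh
# Print an ifcfg-sit1 file built from the values in the SixXS email.
# Arguments (hypothetical order): SixXS IPv4, your IPv4, your IPv6 with prefix.
make_ifcfg_sit1() {
    pop_ipv4=$1
    local_ipv4=$2
    local_ipv6=$3
    cat <<EOF
DEVICE=sit1
BOOTPROTO=none
ONBOOT=yes
IPV6INIT=yes
IPV6_TUNNELNAME="sixxs"
IPV6TUNNELIPV4="$pop_ipv4"
IPV6TUNNELIPV4LOCAL="$local_ipv4"
IPV6ADDR="$local_ipv6"
IPV6_MTU="1280"
TYPE=sit
EOF
}

# Example with placeholder addresses. Review the output first, then redirect
# it to /etc/sysconfig/network-scripts/ifcfg-sit1 as root.
make_ifcfg_sit1 "192.0.2.1" "198.51.100.7" "2001:db8::2/64"
```

Printing to standard output rather than writing the file directly keeps the script harmless to run and makes it easy to diff against an existing config.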

Just substitute the values from your email into the files above. Once you have this in place, reboot or restart your networking and you should see something like this:

sit1 Link encap:IPv6-in-IPv4

inet6 addr: 2a01:x:x:x::2/64 Scope:Global

inet6 addr: fe80::4e2f:c3c6/128 Scope:Link

UP POINTOPOINT RUNNING NOARP MTU:1480 Metric:1

RX packets:9796 errors:0 dropped:0 overruns:0 frame:0

TX packets:7301 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:7181061 (6.8 MiB) TX bytes:1277642 (1.2 MiB)

% ping6 -c 3 -n noc.sixxs.net

PING noc.sixxs.net(2001:838:1:1:210:dcff:fe20:7c7c) 56 data bytes

64 bytes from 2001:838:1:1:210:dcff:fe20:7c7c: icmp_seq=0 ttl=57 time=20.2 ms

64 bytes from 2001:838:1:1:210:dcff:fe20:7c7c: icmp_seq=1 ttl=57 time=28.4 ms

64 bytes from 2001:838:1:1:210:dcff:fe20:7c7c: icmp_seq=2 ttl=57 time=20.1 ms

--- noc.sixxs.net ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2008ms

rtt min/avg/max/mdev = 20.181/22.934/28.406/3.869 ms, pipe 2

Since this is a remote machine it took me some time to figure out how to get browsing going through it, but once I reconnected my SSH SOCKS tunnel it immediately became IPv6 aware and was happily routing me to sites like ipv6.google.com. To do this, start an SSH SOCKS tunnel from your desktop to the machine.

Now set your Firefox network.proxy.socks_remote_dns setting to true in about:config, and point your browser at localhost:1080 as a SOCKS proxy. Your SSH session should now work as a perfectly effective IPv4-to-IPv6 gateway. You can test it by browsing to either the sixxs.net homepage or ipv6.google.com; watch out for the special Google logo.
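The exact SSH command did not survive in this copy of the post. A minimal sketch of a SOCKS tunnel matching the localhost:1080 proxy above, assuming OpenSSH on the desktop, could look like this. The helper name `socks_tunnel_cmd` and the user and host are hypothetical placeholders; `-D` and `-N` are standard OpenSSH options.

```shell
#!/bin/sh
# Build the OpenSSH command for a local SOCKS proxy.
#   -D PORT : dynamic (SOCKS) forwarding, listening on localhost:PORT
#   -N      : do not run a remote command, only forward
# The remote user and host are hypothetical placeholders.
socks_tunnel_cmd() {
    remote=$1
    port=${2:-1080}          # default matches the localhost:1080 proxy setting
    echo "ssh -N -D $port $remote"
}

# Print the command rather than running it, so it can be reviewed first.
socks_tunnel_cmd "user@ipv6-host.example.com"
```

With remote DNS enabled in the browser, name lookups happen on the far end of the tunnel, which is what lets an IPv4-only desktop reach IPv6-only names.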

by R.I. Pienaar | Apr 4, 2009 | Front Page

CentOS 5.3 was released on the 1st of April. I’ve since updated a whole lot of my machines to this version and have been very happy.

There are a few gotchas, mostly well covered in the release notes. The only other odd thing I found was that /etc/snmp/snmpd.options has now moved to /etc/sysconfig/snmpd.options, and ditto for snmptrapd.options. It’s a bit of a weird change: while it makes the SNMPD config a bit more like the rest of the RedHat system, it is still different. You’d think, based on all the other files in /etc/sysconfig, that this one would have been called /etc/sysconfig/snmpd rather than have the .options bit tacked on.
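If you manage a mix of CentOS versions, a quick check for which of the two locations is in use can save some head scratching. This is only a sketch: the function name `find_snmpd_options` is made up, and the optional root directory argument exists purely so the check can be tried against a scratch tree.

```shell
#!/bin/sh
# Report where snmpd.options lives, preferring the new location.
# The optional argument is an alternative root directory (defaults to "/").
find_snmpd_options() {
    root=${1:-/}
    for path in etc/sysconfig/snmpd.options etc/snmp/snmpd.options; do
        if [ -f "${root%/}/$path" ]; then
            echo "${root%/}/$path"
            return 0
        fi
    done
    return 1
}

find_snmpd_options || echo "snmpd.options not found in either location"
```

The same loop works for snmptrapd.options by swapping the file name.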

Another change I noticed is that Xen is behaving a lot better now on suspends. If I reboot a dom0 and then bring it back up, the domUs resume where they were and, unlike in the past, the clocks do not go all over the place. In fact I’ve even seen SSH sessions stay up between reboots, though SNMP still sometimes stops working after resume.

The general overall look of the distribution is much better. The artwork has been redone throughout and now forms a nice cohesive look and feel.

While investigating the cause of the /etc/snmp/snmpd.options file mysteriously going missing I once again had the misfortune of having to deal with #centos on freenode. It really is one of the most hostile channels I’ve come across in the open source world; people are just outright arseholes, everyone including the project leaders.

They immediately assume you have no clue and don’t know what you’re talking about, and generally just treat anyone who dares suggest something is broken like shit, with the usual ‘works for me’, ‘read the docs’, ‘it’s in the release notes’ or ‘looking at the source will not help’ style responses to every question. As it turns out, every one of those remarks was just plain wrong. No, it didn’t work for them; their files also got moved by the installer. No, it was not in the docs or release notes. No, looking at the source would have helped a lot more than they did, because I would then have been able to see for myself that the post install of the RPM moves the files. It took literally over an hour to get even one of them to actually make the effort to be helpful, compared to the roughly 2 minutes it would have taken if the SRPMs were available at release time.

I think they’re really doing the project a big disservice by not sorting out the IRC channel. In fact they actively defend and even promote the hostility shown there. In contrast to the Puppet IRC channel, for instance, it really is a barbaric bit of the 3rd world.

by R.I. Pienaar | Jan 26, 2009 | Front Page, Usefull Things

I’ve previously mentioned the really great syscfg integrated IPSEC on RedHat Linux here, but thought I’d now show a real world example of a Cisco ASA and a RedHat machine talking, since it is not totally obvious and it is not something I have seen specifically documented anywhere using Google.

A quick recap: RedHat now lets you build IPSEC VPNs using just simple ifcfg-eth0 style config files.

I’ll quickly show both sides of the config to build a site to site VPN. Site A is a Linux machine with a real IP address, while Site B is a Cisco ASA with a private network behind it. The Linux machine has this in /etc/sysconfig/network-scripts/ifcfg-ipsec1:

TYPE=IPSEC

ONBOOT=yes

IKE_METHOD=PSK

SRCGW=1.2.3.4

DSTGW=2.3.4.5

SRCNET=1.2.3.4/32

DSTNET=10.1.1.0/24

DST=2.3.4.5

AH_PROTO=none

The pre-shared key is in /etc/sysconfig/network-scripts/keys-ipsec1 as per the RedHat documentation.
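For completeness, here is a sketch of creating that key file with tight permissions. It assumes the `IKE_PSK=` variable name used by the Red Hat network-scripts for pre-shared keys; the function name `write_ipsec_key` and its directory argument are hypothetical, and the example writes into a scratch directory rather than the real location.

```shell
#!/bin/sh
# Write a keys-ipsec1 style file containing the pre-shared key.
# Assumption: the network-scripts read the key from an IKE_PSK= line.
# The directory argument is a hypothetical convenience for trying this out.
write_ipsec_key() {
    dir=$1
    psk=$2
    (
        umask 077    # create the file readable and writable by its owner only
        printf 'IKE_PSK=%s\n' "$psk" > "$dir/keys-ipsec1"
    )
}

# Example: write into a scratch directory and show the result.
scratch=$(mktemp -d)
write_ipsec_key "$scratch" "secret"
cat "$scratch/keys-ipsec1"
rm -rf "$scratch"
```

On a real system the target directory would be /etc/sysconfig/network-scripts, and the key must match the pre-shared-key configured on the ASA.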

The Cisco ASA does not support AH, so disabling AH turns out to be the magic knob to tweak to make it work.

In this case the Linux Server on Site A has the IP address 1.2.3.4 while the ASA is running on 2.3.4.5, the private network at Site B is 10.1.1.0/24.

On the Cisco the relevant lines of config are:

object-group network siteb_to_sitea_local_hosts

description Site B to Site A VPN Local hosts

network-object 10.1.1.0 255.255.255.0

object-group network siteb_to_sitea_remote_hosts

description Site B to Site A VPN Remote Hosts

network-object 1.2.3.4 255.255.255.255

access-list siteb_to_sitea_vpn extended permit ip object-group siteb_to_sitea_local_hosts object-group siteb_to_sitea_remote_hosts

access-list inside_nat_bypass extended permit ip object-group siteb_to_sitea_local_hosts object-group siteb_to_sitea_remote_hosts

nat (inside) 0 access-list inside_nat_bypass

crypto map outside_map 20 match address siteb_to_sitea_vpn

crypto map outside_map 20 set pfs

crypto map outside_map 20 set peer 1.2.3.4

crypto map outside_map 20 set transform-set ESP-3DES-SHA

crypto map outside_map 20 set security-association lifetime seconds 3600

crypto map outside_map 20 set security-association lifetime kilobytes 4608000

crypto map outside_map interface outside

crypto isakmp enable outside

crypto ipsec transform-set ESP-3DES-SHA esp-3des esp-sha-hmac

crypto isakmp policy 20

authentication pre-share

encryption 3des

hash sha

group 2

lifetime 28800

tunnel-group 1.2.3.4 type ipsec-l2l

tunnel-group 1.2.3.4 ipsec-attributes

pre-shared-key secret

Using these specific phase 1 and phase 2 parameters (timings, PFS, crypto etc.) means that it will match up with the default out-the-box parameters as per /etc/racoon/racoon.conf, thereby minimizing the amount of tweaking needed on the RedHat machine.

All that is needed now is to start the VPN using /etc/sysconfig/network-scripts/ifup ifcfg-ipsec1 and you should be able to communicate between your nodes.