by R.I. Pienaar | Mar 9, 2011 | Uncategorized

When developing agents for The Marionette Collective it’s often quite difficult to debug weird behavior, so I wrote a debugger that makes development easier.

Agents run in a thread inside a daemon that is connected through middleware, and all of this makes debugging them really hard. The debugger is a harness that runs an agent standalone, allowing you to trace calls, set breakpoints and use all the other goodies you expect in a good debugger.

To use it, grab the source from GitHub and install the ruby-debug gem.

Since I am using the normal ruby-debug debugger for the hard work, you can read any of the many tutorials and screencasts about its use and apply what you learn in this environment. The screencast below gives a quick tour through the features and usage. It is high quality, so feel free to watch it full screen.

As the screencast mentions, there’s still some tweaking to do so that ruby-debug notices code changes without a restart, and I might tweak the trace option a bit. Even so, this is already a huge improvement for anyone writing an agent.

by R.I. Pienaar | Mar 5, 2011 | Uncategorized

We’ll be releasing The Marionette Collective version 1.1.3 on Monday which will bring with it a major new feature called Subcollectives. This feature lets you partition your collective into multiple isolated broadcast zones much like a VLAN does to a traditional network. Each node can belong to one or many collectives at the same time.

An interesting side effect of this feature is that you can create subcollectives to improve the security of your network. Here I’ll walk through a process for giving untrusted 3rd parties access to just a subset of your servers.

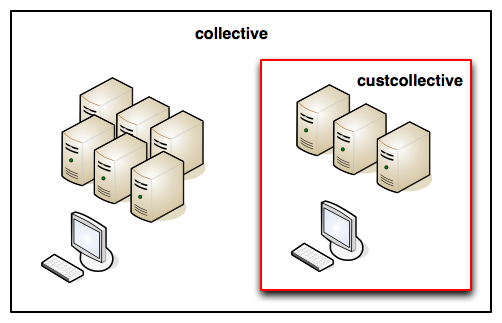

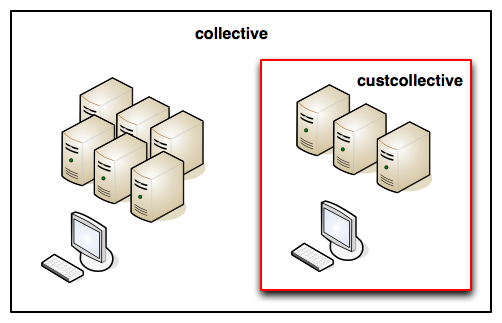

The image above demonstrates a real-world case where a customer wanted to control their machines using the abilities exposed by MCollective on a network hosting servers for many customers.

The customer has a CMS that creates accounts on his backend systems. Sometimes he detects abuse from a certain IP and needs to be able to block that IP on all his customer-facing systems immediately. We did not want to give the CMS root SSH access to the servers, so we provided an MCollective agent that exposes this ability using SimpleRPC.

Rather than deploy a new collective using different daemons, we use the new Subcollectives feature to make the customer’s machines belong to a new collective called custcollective while still belonging to the existing collective. We then restrict the user at the middleware layer and set his machines up so he can reach them through the newly created collective.

To properly secure this setup we give the customer his own username on the ActiveMQ server and restrict it so it can only communicate with his subcollective:
```xml
<simpleAuthenticationPlugin>
  <users>
    <authenticationUser username="customer" password="secret" groups="customer,everyone"/>
  </users>
</simpleAuthenticationPlugin>

<authorizationPlugin>
  <map>
    <authorizationMap>
      <authorizationEntries>
        <authorizationEntry topic="custcollective.>" write="mcollectiveusers,customer" read="mcollectiveusers,customer" admin="mcollectiveusers,genzee" />
      </authorizationEntries>
    </authorizationMap>
  </map>
</authorizationPlugin>
```

This sets up the namespace for custcollective and gives the user access to it. He gets access to his collective and to no others.
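The following minimal Python sketch shows what that `authorizationEntry` permits. It is an illustration, not ActiveMQ's code. It assumes ActiveMQ-style destination wildcards, where names are split on `.`, `*` matches exactly one segment and `>` matches the remaining segments. Topic names such as `custcollective.iptables.command` are assumptions about how MCollective names its topics. The ACL holds only the single entry shown above, and anything no entry grants is treated as denied.

```python
# Illustrative model of ActiveMQ destination wildcards and the single
# authorizationEntry shown above; not ActiveMQ's own implementation.

def topic_matches(pattern: str, topic: str) -> bool:
    """Match an ActiveMQ-style pattern: '.' separates segments, '*' matches
    exactly one segment, '>' matches the remaining segment(s)."""
    pat = pattern.split(".")
    top = topic.split(".")
    for i, part in enumerate(pat):
        if part == ">":
            # This sketch requires at least one segment after the prefix.
            return len(top) > i
        if i >= len(top) or (part != "*" and part != top[i]):
            return False
    return len(pat) == len(top)


# The authorizationEntry from activemq.xml, as plain data.
ACL = [
    {"topic": "custcollective.>",
     "read": {"mcollectiveusers", "customer"},
     "write": {"mcollectiveusers", "customer"}},
]


def allowed(groups: set[str], topic: str, op: str) -> bool:
    """True if an entry matching `topic` grants `op` ('read' or 'write') to one of `groups`."""
    return any(topic_matches(e["topic"], topic) and bool(groups & e[op]) for e in ACL)


customer = {"customer", "everyone"}  # groups from the authenticationUser line
print(allowed(customer, "custcollective.iptables.command", "write"))  # True
print(allowed(customer, "collective.iptables.command", "write"))      # False
```

With only that entry in place, a client in the `customer` group can publish to anything under `custcollective.` but to nothing under the main `collective`.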

Next we configure the customer’s servers to belong to the new collective in addition to the existing one, using their server.cfg:

```
collectives = collective,custcollective
main_collective = collective
```
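The effect of the `collectives` line can be modelled with a small Python sketch. It reads `key = value` settings like the ones above and works out which nodes a broadcast to a given collective would reach. The parser is hypothetical and far simpler than MCollective's own. Requiring `main_collective` to appear in `collectives` is a check this sketch adds, and the node names `web1` and `db9` are made up.

```python
# Hypothetical helpers: a tiny "key = value" reader, not MCollective's real parser.

def parse_cfg(text: str) -> dict[str, str]:
    """Read 'key = value' lines, skipping blanks and '#' comments."""
    cfg: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        cfg[key.strip()] = value.strip()
    return cfg


def collectives_of(cfg: dict[str, str]) -> list[str]:
    """Return the node's collectives; this sketch insists main_collective is one of them."""
    names = [c.strip() for c in cfg.get("collectives", "").split(",") if c.strip()]
    main = cfg.get("main_collective")
    if main not in names:
        raise ValueError(f"main_collective {main!r} is not in collectives {names}")
    return names


def reached_by(collective: str, nodes: dict[str, str]) -> list[str]:
    """Names of nodes whose config subscribes them to `collective`."""
    return sorted(name for name, text in nodes.items()
                  if collective in collectives_of(parse_cfg(text)))


# Two made-up nodes: one of the customer's, and one belonging to someone else.
nodes = {
    "web1": "collectives = collective,custcollective\nmain_collective = collective\n",
    "db9": "collectives = collective\nmain_collective = collective\n",
}
print(reached_by("custcollective", nodes))  # ['web1']
print(reached_by("collective", nodes))      # ['db9', 'web1']
```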

And finally we give the customer a client.cfg that limits him to this collective:

```
collectives = custcollective
main_collective = custcollective
plugin.stomp.pool.user1 = customer
plugin.stomp.pool.password1 = secret
```

Because of the restrictions at the middleware level, even if the customer were to specify other collective names in his client.cfg he simply would not be able to communicate with those hosts.

Next we set up Authorization so the user can only reach the agents and actions we authorize. A sample policy file for the Action Policy Authorization Plugin is below. It lets the user call the iptables agent’s block action on just his machines, while allowing me to use all actions on all machines:

```
policy default deny
allow cert=rip * * *
allow cert=customer block customer=acme *
```
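The rule semantics fit in a few lines of Python. This is a hypothetical evaluator, not the Action Policy plugin's code. It assumes the first matching rule wins and the `policy default` line applies otherwise. It reads each rule as `<verdict> <caller> <action> <fact=value> <class>` with `*` as a wildcard, and it handles only one value per field, as in the sample above. The `unblock` action and the `other` customer in the checks are made up.

```python
# Hypothetical Action Policy evaluator: first matching rule wins, otherwise the
# "policy default" verdict applies. One value per field, '*' is a wildcard.

POLICY = """\
policy default deny
allow cert=rip * * *
allow cert=customer block customer=acme *
"""


def decide(policy: str, caller: str, action: str,
           facts: dict[str, str], classes: set[str]) -> str:
    default = "deny"
    for raw in policy.splitlines():
        fields = raw.split()
        if not fields or fields[0].startswith("#"):
            continue
        if fields[:2] == ["policy", "default"]:
            default = fields[2]
            continue
        verdict, rule_caller, rule_action, rule_fact, rule_class = fields
        if rule_caller not in ("*", caller):
            continue
        if rule_action not in ("*", action):
            continue
        if rule_fact != "*":
            key, value = rule_fact.split("=", 1)
            if facts.get(key) != value:
                continue
        if rule_class != "*" and rule_class not in classes:
            continue
        return verdict
    return default


print(decide(POLICY, "cert=customer", "block", {"customer": "acme"}, set()))    # allow
print(decide(POLICY, "cert=customer", "unblock", {"customer": "acme"}, set()))  # deny
```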

And finally, thanks to the Auditing built into MCollective, the client’s actions are fully logged:

```
2011-03-05T21:03:52.598552+0000: reqid=ebf3c01fdaa92ce9f4137ad8ff73336b: reqtime=1299359032 caller=cert=customer@some.machine agent=iptables action=block data={:ipaddr=>"196.xx.xx.146"}
```
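Audit lines in this shape are easy to post-process. The following Python sketch splits one into fields. The regular expression is an assumption derived only from the sample line above, not from a documented format, and `data` is kept as the raw Ruby-style string.

```python
import re
from datetime import datetime, timezone

# Pattern inferred from the single sample line above (an assumption).
AUDIT_RE = re.compile(
    r"^(?P<time>\S+): reqid=(?P<reqid>\w+): reqtime=(?P<reqtime>\d+) "
    r"caller=(?P<caller>\S+) agent=(?P<agent>\S+) action=(?P<action>\S+) data=(?P<data>.*)$"
)


def parse_audit(line: str) -> dict[str, str]:
    """Split one audit line into named fields; raise if it has another shape."""
    m = AUDIT_RE.match(line)
    if m is None:
        raise ValueError(f"unrecognised audit line: {line!r}")
    return m.groupdict()


SAMPLE = ('2011-03-05T21:03:52.598552+0000: reqid=ebf3c01fdaa92ce9f4137ad8ff73336b: '
          'reqtime=1299359032 caller=cert=customer@some.machine agent=iptables '
          'action=block data={:ipaddr=>"196.xx.xx.146"}')

entry = parse_audit(SAMPLE)
# reqtime is a Unix timestamp; it agrees with the ISO time at the start of the line.
when = datetime.fromtimestamp(int(entry["reqtime"]), tz=timezone.utc)
print(entry["caller"], entry["agent"], entry["action"])
print(when.isoformat())  # 2011-03-05T21:03:52+00:00
```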

The customer is happy: he was able to build a real-time IDS that reacts to events throughout his network, and he can interact with it from the CLI, his automated IDS and even his web systems.

Using this technique and combining it with the existing AAA in MCollective we as an ISP were able to expose a subset of machines to an untrusted 3rd party in a way that is safe, secure and audited without having to give the 3rd party elevated or even shell access to these machines.

by R.I. Pienaar | Feb 26, 2011 | Uncategorized

Amazon is keeping things ticking along nicely by constantly adding features to their offerings. I’m almost more impressed at the pace and diversity of innovation than the final solution.

During the week they announced AWS CloudFormation. Rather than add to the already unbearable tedium of “it’s not a Chef or Puppet killer” blog posts I thought I’d just go ahead and do something with it.

Until now, people who wanted to evaluate MCollective had to go through a manual process: start the ActiveMQ instance first, gather some data from it, and then start a number of other instances, supplying the ActiveMQ details as user data. This was by no means painful, but CloudFormation can make it much better.

I’ve created a new demo using CloudFormation and recorded a video showing how to use it. You can read all about it here.

The demo has been upgraded to the latest production MCollective version, which came out recently. This collective has the same features as the previous demos: registration, package and a few other bits.

Impact

Overall I think this is a very strong entry into the market. It still needs work, but it’s a step in the right direction. I dislike typing JSON about as much as I dislike typing XML, but this isn’t an Amazon problem to fix; that’s what frameworks and API clients are for.

It’s still markup aimed at machines and the following pretty much ensures user error as much as XML does:
```json
"UserData" : {
  "Fn::Base64" : {
    "Fn::Join" : [ ":", [
      "PORT=80",
      "TOPIC=", {
        "Ref" : "logical name of an AWS::SNS::Topic resource"
      },
      "ACCESS_KEY=", { "Ref" : "AccessKey" },
      "SECRET_KEY=", { "Ref" : "SecretKey" } ]
    ]
  }
},
```
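It helps to compute what that fragment actually produces. The following Python sketch emulates the two intrinsic functions: `Fn::Join` concatenates the list items with the delimiter between each pair, and `Fn::Base64` encodes the result. The values substituted for each `Ref` are made-up placeholders. In a real stack CloudFormation resolves them.

```python
import base64


def fn_join(delimiter: str, values: list[str]) -> str:
    # Fn::Join: list items concatenated with the delimiter between each pair.
    return delimiter.join(values)


def fn_base64(value: str) -> str:
    # Fn::Base64: base64 encoding of the string.
    return base64.b64encode(value.encode("utf-8")).decode("ascii")


# Placeholder values standing in for the three Refs.
refs = {"Topic": "example-topic-arn", "AccessKey": "AKIDEXAMPLE", "SecretKey": "secretexample"}

user_data = fn_join(":", [
    "PORT=80",
    "TOPIC=", refs["Topic"],
    "ACCESS_KEY=", refs["AccessKey"],
    "SECRET_KEY=", refs["SecretKey"],
])
encoded = fn_base64(user_data)
print(user_data)  # PORT=80:TOPIC=:example-topic-arn:ACCESS_KEY=:AKIDEXAMPLE:SECRET_KEY=:secretexample
```

The `:` delimiter also lands between each `KEY=` and its value. Whether that is what the instance's boot script expects is exactly the kind of detail that is easy to get wrong in hand-written JSON.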

CloudFormation represents a great opportunity for framework builders like Puppet Labs and Opscode, as it can enhance their offerings a great deal. Puppet especially desperately needs a platform-wide view, not to mention basic cloud integration.

Tools like Fog and its peers will no doubt support this feature soon, and so will knife as a side effect.

Issues

I have a few issues with the current offering. It feels like a first iteration, and I have no doubt things will improve. The issues are fairly simple ones, and I am really surprised they weren’t addressed in the first release.

Fn::GetAtt is too limited

You can access properties of other resources using the Fn::GetAtt function. For instance, say you created an RDS database and need its IP address:
```json
"Fn::GetAtt" : [ "MyDB" , "Endpoint.Address"]
```

This is pretty great, but unfortunately the set of attributes it can access is extremely limited.

For example, given an EC2 instance you might want to find out its private IP address. What if it offers an internal service, like my ActiveMQ instance does for MCollective? You can’t get access to this: the only attribute available is the public IP address. That is a problem, because if you talk to the public address you get billed even for traffic between your own instances! So you have to do a PTR lookup on the IP and then either use the public DNS name, or do another lookup and rely on Amazon’s split-horizon DNS to direct you to the private IP. This is unfortunate, since it adds a lot of error-prone steps to the process.
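The two-lookup workaround looks like the following minimal Python sketch. It uses the standard `socket.gethostbyaddr` (PTR lookup) and `socket.gethostbyname` (forward lookup). Whether the forward lookup really returns the private IP depends on running inside EC2 with Amazon's resolver, which is an assumption here. The resolvers are injectable so the logic can be exercised with fakes, and the hostname and addresses in the check are placeholders.

```python
import socket
from typing import Callable, List, Tuple

Reverse = Callable[[str], Tuple[str, List[str], List[str]]]
Forward = Callable[[str], str]


def private_ip_for(public_ip: str,
                   reverse: Reverse = socket.gethostbyaddr,
                   forward: Forward = socket.gethostbyname) -> str:
    # Step 1: PTR lookup on the public IP gives the instance's public DNS name.
    public_name, _aliases, _addresses = reverse(public_ip)
    # Step 2: resolve that name again; from inside EC2, the split-horizon DNS
    # is expected to answer with the private IP (an assumption of this sketch).
    return forward(public_name)
```

Each step is a separate network round trip that can fail independently, which is why an attribute exposing the private IP directly would be so much simpler.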

This situation is repeated for more or less every resource that CloudFormation can manage. Hopefully this situation will improve pretty rapidly.

Lack of options

When creating a stack it seems obvious that you might want, say, 2 webservers. At the moment (unless I am completely blind and missed something) you have to specify each instance completely, rather than set a simple property that tells it to make multiple copies of the same resource.

This seems a very obvious omission, it’s such a big one that I am sure I just missed something in the documentation.
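Until such a property exists, the repetition can be generated rather than typed, which is the frameworks-and-API-clients approach mentioned earlier. The following Python sketch builds the `Resources` section with N identical `AWS::EC2::Instance` entries. The logical names `WebServer1`, `WebServer2`, the image ID and the instance type are placeholder assumptions.

```python
import json


def web_servers(count: int, image_id: str, instance_type: str) -> dict:
    """Build a template whose Resources holds `count` identical EC2 instances."""
    resources = {
        f"WebServer{i}": {
            "Type": "AWS::EC2::Instance",
            "Properties": {"ImageId": image_id, "InstanceType": instance_type},
        }
        for i in range(1, count + 1)
    }
    return {"Resources": resources}


# "ami-12345678" is a placeholder image ID.
template = web_servers(2, "ami-12345678", "m1.small")
print(json.dumps(template, indent=2))
```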

Some stuff just doesn’t work

I’ll preface this by again saying I might just not be getting something. You’re supposed to be able to create a Security Group that allows all machines in another Security Group to communicate with it.

The documentation says you can set SourceSecurityGroupName and then you don’t have to set SourceSecurityGroupOwnerId.

Unfortunately try as I might I can’t get it to stop complaining that SourceSecurityGroupOwnerId isn’t set when I set SourceSecurityGroupName which is just crazy talk since there’s no way to look up the current Owner ID in any GetAtt property.

Additionally, it claims the FromPort and ToPort properties are compulsory, but the documentation for the individual APIs says you cannot set those if you set SourceSecurityGroupName.

I’ve given up on making the security groups the way I want them for the purposes of my demo, but I am fairly sure this is a bug.

Slow

If you have a Stack with 10 resources, it will order them based on your Refs. For example, if 5 instances require the public IP of a 6th, it will create that 6th one first.

Unfortunately, even when there is then no reason for things to happen in a particular order, it will sit and create the resources one by one, serially, rather than start all the requests in parallel.

With even a reasonably complex Stack this can be very tedious: starting a 6-node Stack can easily take 15 minutes. It also shuts the Stack down serially, so just booting and shutting down 6 nodes wastes 30 minutes!

There is definitely some room for improvement here. I’d like to give developers a self-service environment, and they won’t enjoy sitting and waiting half an hour before they can get going.
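How much parallelism the Refs would allow can be worked out with a topological sort that groups resources into "waves". Everything in a wave depends only on earlier waves, so each wave could be started at once. The following Python sketch uses the standard library's `graphlib.TopologicalSorter` on the example above: 5 instances that need the address of a 6th. This is not how CloudFormation schedules internally. The timing estimate assumes every resource takes the same 15 / 6 = 2.5 minutes.

```python
from graphlib import TopologicalSorter


def creation_waves(deps: dict[str, set[str]]) -> list[list[str]]:
    """Group resources into waves; each wave depends only on earlier waves."""
    ts = TopologicalSorter(deps)  # maps each resource to the resources it Refs
    ts.prepare()
    waves = []
    while ts.is_active():
        ready = sorted(ts.get_ready())
        waves.append(ready)
        ts.done(*ready)
    return waves


# Five nodes that each need the public IP of an ActiveMQ instance.
deps = {f"Node{i}": {"ActiveMQ"} for i in range(1, 6)}
waves = creation_waves(deps)

per_resource = 15 / 6  # minutes, assuming every resource takes the same time
serial = per_resource * sum(len(w) for w in waves)  # 15.0 minutes, one at a time
parallel = per_resource * len(waves)                # 5.0 minutes, one wave at a time
print(waves, serial, parallel)
```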

Shoddy Documentation

I’ve come across a number of documentation inconsistencies that can be really annoying. Little things like PublicIP vs PublicIp turn writing the JSON files into an error-prone cycle of trial and error. This is very easy to fix; it’s only worth mentioning because of the next point.

Given how slow it is to create and tear down stacks, getting something like this wrong can really hurt your productivity.
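One cheap defence is to check names for case-only mismatches before creating a stack. The following Python sketch flags any name that differs from a known spelling only by case. The `KNOWN` set is an example list and an assumption. Fill it from the current reference documentation rather than trusting it.

```python
def case_mismatches(used: list[str], known: set[str]) -> dict[str, str]:
    """Map each used name that matches a known name only case-insensitively
    to the known spelling."""
    by_lower = {name.lower(): name for name in known}
    fixes = {}
    for name in used:
        correct = by_lower.get(name.lower())
        if correct is not None and correct != name:
            fixes[name] = correct
    return fixes


# Example list only; take the real attribute names from the reference docs.
KNOWN = {"PublicIp", "PublicDnsName"}
print(case_mismatches(["PublicIP", "PublicDnsName"], KNOWN))  # {'PublicIP': 'PublicIp'}
```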

The AWS Console

Since the docs are a bit poor, you’ll be spending some time in the AWS console or the CLI tools. I didn’t try the CLI tools in this case, but the console is not great at all.

I am seeing weird behavior where I upload a new template into a new Formation and it picks up an older one at first. Given how it works (it saves the JSON to a bucket under your name, each file with a unique name), I put this down to user error. But then I tried harder not to mess it up, and it does seem to keep happening.

I’m still not quite ready to blame the AWS console for this, though. It might be browser caching or something else. Either way, it makes the whole thing a lot more frustrating to use.

What I am 100% ready to blame it for is the general unfriendliness of its error messages, and I am guessing the feedback you’d get from the API is equally bad:
```
"Invalid template resource property IP"
```

Needless to say the string ‘IP’ isn’t even anywhere to be found in my template.

When I eventually tracked this down, it was a combination of the caching mentioned above (it was working on an old JSON file) and a user error on my side. I didn’t spot it because it wasn’t clear that it was using an old JSON file rather than the one I had just uploaded through my browser.

So my recommendation is simply not to use the AWS console while creating stacks. It’s an end-user tool and excels at that. When building stacks use the CLI, as it includes tools to do local validation of the JSON and avoids annoying browser caches.
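Even before any CloudFormation-specific validation, plain JSON syntax errors can be caught locally in a second instead of after a slow stack round trip. The following Python sketch uses only the standard `json` module. It checks syntax, not CloudFormation semantics, so it complements the CLI validation rather than replacing it.

```python
import json
from typing import Optional


def check_template(text: str) -> Optional[str]:
    """Return None if `text` is valid JSON, else 'line L, column C: reason'."""
    try:
        json.loads(text)
    except json.JSONDecodeError as err:
        return f"line {err.lineno}, column {err.colno}: {err.msg}"
    return None


broken = '{\n  "Resources": {}\n  "Outputs": {}\n}'  # missing comma after line 2
print(check_template(broken))
```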

by R.I. Pienaar | Jan 6, 2011 | Uncategorized

When developing plugins for MCollective, testing can be a pain, since true testing means you need a lot of virtual machines and so forth.

I wrote something called the MCollective Collective Builder that assists in starting up a Stomp server and any number of mcollectived processes on one machine, which lets you develop and test the parallel model without needing many machines.

I’ve found it to be very useful in developing plugins, I recently wrote a new encrypting security plugin and the entire development was done over 10 nodes all on a single 512MB RAM single CPU virtual machine with no complex plugin synchronization issues etc.
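The core idea (many daemons, one Stomp server, one machine) can be illustrated with a small generator of per-node config files. This is not the Collective Builder's code. It is a hypothetical Python sketch in which each local mcollectived gets its own `identity` and log file while all of them share the same Stomp host and port. The port 61613 and the `/tmp/collective` base directory are placeholder assumptions.

```python
def node_configs(count: int, host: str = "localhost", port: int = 61613,
                 basedir: str = "/tmp/collective") -> dict[str, str]:
    """Return {identity: server.cfg text} for `count` local daemons that share
    one Stomp server but have distinct identities and log files."""
    configs = {}
    for i in range(1, count + 1):
        identity = f"node{i}"
        configs[identity] = "\n".join([
            f"identity = {identity}",
            "connector = stomp",
            f"plugin.stomp.host = {host}",
            f"plugin.stomp.port = {port}",
            f"logfile = {basedir}/{identity}/mcollective.log",
        ]) + "\n"
    return configs


cfgs = node_configs(10)
print(cfgs["node10"])
```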

You can see how to use it below, as usual it’s best to view the video full screen.

by R.I. Pienaar | Dec 15, 2010 | Uncategorized

Today, after threatening to do so since around September or even earlier, we released version 1.0.0 of The Marionette Collective. This is quite a big milestone for the RPC framework: I believe it is now ready for prime time as a real-time RPC framework accessible from many different systems, facilitating code reuse and large-scale parallel execution.

I started working on the SimpleRPC framework (and the 0.4 series) around the 20th of December 2009. 10 releases later it’s done, and that became 1.0.0.

1.1.0 will bring a number of new features; for example, the SimpleRPC framework will be improved with delayed and backgrounded jobs. There’s a published roadmap for what is coming, and some things, like the Java integration, aren’t even on that list.

The EC2 based demo system has also had a much needed refresh upgrading it from 0.4.4 to 1.0.0 so you can go have a play with MCollective using that without all the hassle of deploying stuff.

With the support and extra time afforded by the Puppet Labs acquisition, the pace of development will increase. I can also now devote resources to maintaining a production release, so there will be a 1.0.x branch that gets only bug fixes and packaging improvements while we work on the rest of the new and shiny.