Scheduling Puppet runs is a hard problem: you either run the daemon or run it through cron, and both have drawbacks. There has been some discussion about decoupling this or improving the remote control abilities of Puppet; this is my entry into that discussion.

Running the daemon is all about the memory problems of pretty much everything involved. You also suffer if a dom0 reboots, as the 20 domUs on it will all come up together and produce a large burst of concurrent runs.

Running from cron, you have problems scheduling it nicely. The simplest approach is just to sleep a random period of time, but this means clients don’t always run at a predictable time and you still get concurrency issues.
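To illustrate why a random sleep still bunches runs together, here is a minimal Ruby sketch that compares the closest pair of runs under random offsets with an evenly spread schedule. The node count, interval, seed and the `min_cyclic_gap` helper are all assumptions made up for this illustration; none of it comes from Puppet or mcollective.

```ruby
# Smallest gap (in seconds) between consecutive run start times, treating the
# interval as a repeating cycle so the last run wraps around to the first.
# Hypothetical helper, for illustration only.
def min_cyclic_gap(offsets, interval)
  sorted = offsets.sort
  gaps = sorted.each_cons(2).map { |a, b| b - a }
  gaps << (sorted.first + interval - sorted.last) # wrap-around gap
  gaps.min
end

interval = 30 * 60 # 30 minutes, in seconds (assumed value)
nodes    = 20      # assumed number of clients

# Cron plus a random sleep: every node picks its own offset independently.
rng = Random.new(42) # fixed seed so the sketch is repeatable
random_offsets = Array.new(nodes) { rng.rand(interval) }

# An evenly spread schedule, for comparison.
even_offsets = Array.new(nodes) { |i| i * interval / nodes }

puts "random sleep, closest two runs: #{min_cyclic_gap(random_offsets, interval)}s"
puts "even spread,  closest two runs: #{min_cyclic_gap(even_offsets, interval)}s"
```

Whatever the seed, the closest pair under random offsets can never be further apart than under the even spread, because the gaps around the cycle always add up to the full interval.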

I’ve written an mcollective-based Command and Control for Puppet that launches Puppet runs. The aim is to spread the CPU load on my masters out evenly so that I can use lower spec machines for masters or, in my case, re-use my master machines as monitoring and middleware nodes.

It basically has these features:

- Discovers the list of nodes to manage based on a supplied filter. I have regional masters, so I manage groups of Puppet nodes independently.

- Evenly spreads out the Puppet runs over an interval: with 10 nodes and a 30 minute interval I get a run every 3 minutes.

- Nodes run at a predictable time every time, even after reboots since the node list is just run through alphabetically. If the node list stays constant you’ll always run at the same time give or take 10 seconds. If nodes get added the behavior will be predictable.

- Before scheduling a run it checks the overall concurrency of Puppet runs; if this has reached a limit it will skip the background run. I want to give priority to runs that I start by hand with --test, and this ensures that happens.

- If the client it is about to run fetched its catalog recently, maybe via --test, it will skip that run.
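The features above can be sketched in a few lines of Ruby. This is not the code from puppetcommander.rb: the helper names (`sleep_between_runs`, `decide`), the example hostnames and the `min_age` threshold are all hypothetical, and the real tool gets its node list and run state from mcollective rather than from hard-coded values.

```ruby
# Hypothetical helpers for illustration; none of these names come from
# puppetcommander.rb.

# Seconds to wait between consecutive runs so that every node runs once per
# interval, e.g. 10 nodes over 30 minutes gives 180 seconds.
def sleep_between_runs(node_count, interval_minutes)
  return 0 if node_count.zero?
  (interval_minutes * 60.0 / node_count).floor
end

# Decide what to do with one node. Returns :run, or a symbol naming the reason
# the run is skipped. Times are plain epoch seconds; min_age is an assumed
# threshold for "ran recently".
def decide(running_now:, max_concurrent:, last_run_at:, now:, min_age:)
  # Skip once the limit is reached, so hand-started runs keep priority.
  return :skip_concurrency if running_now >= max_concurrent
  # Skip nodes that already ran recently, for example via --test.
  return :skip_recent_run if last_run_at && (now - last_run_at) < min_age
  :run
end

# Walking the list alphabetically gives each node the same slot every time.
nodes = %w[web2.example.net db1.example.net web1.example.net].sort
pause = sleep_between_runs(nodes.size, 30)

nodes.each_with_index do |node, i|
  puts "#{node} is due #{i * pause}s into each interval"
end
```

Because the slot for a node depends only on its position in the sorted list, a reboot of the scheduler or of a node does not change when that node runs, as long as the list itself stays the same.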

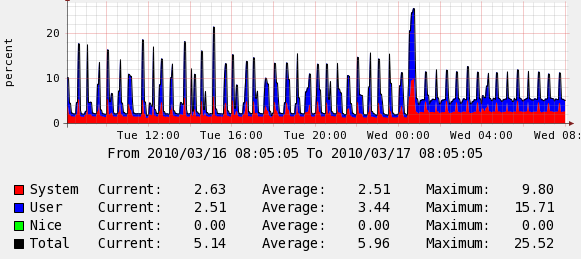

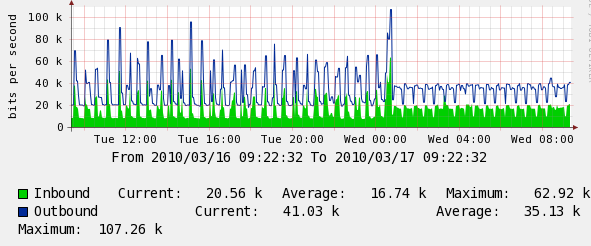

The result is pretty good, spreading 6 nodes out over 30 minutes I get a nice even CPU spread, the spike in the graph after the change is when the node itself runs Puppet. The 2nd graph is eth0 network output, the dip is when localhost is running:

The resulting CPU usage is much smoother, there aren’t periods of no CPU usage and there are no spikes caused by nodes bunching up together.

Below is the output from a C&C session managing 3 machines with an interval of 1 minute and a max concurrency of 1. These machines were still running cron-based puppetd, so you can see the C&C declining to schedule runs when cron runs have already pushed it to the concurrency limit:

    $ puppetcommander.rb --interval 1 -W /dev_server/ --max-concurrent 1
    Wed Mar 17 08:31:29 +0000 2010> Looping clients with an interval of 1 minute(s)
    Wed Mar 17 08:31:29 +0000 2010> Restricting to 1 concurrent puppet run(s)
    Wed Mar 17 08:31:31 +0000 2010> Found 3 puppet nodes, sleeping for ~20 seconds between runs
    Wed Mar 17 08:31:31 +0000 2010> Current puppetds running: 1
    Wed Mar 17 08:31:31 +0000 2010> Puppet run for client dev1.my.net skipped due to current concurrency of 1
    Wed Mar 17 08:31:31 +0000 2010> Sleeping for 20 seconds
    Wed Mar 17 08:31:51 +0000 2010> Current puppetds running: 1
    Wed Mar 17 08:31:51 +0000 2010> Puppet run for client dev2.my.net skipped due to current concurrency of 1
    Wed Mar 17 08:31:51 +0000 2010> Sleeping for 20 seconds
    Wed Mar 17 08:32:12 +0000 2010> Current puppetds running: 0
    Wed Mar 17 08:32:12 +0000 2010> Running agent for dev3.my.net
    Wed Mar 17 08:32:15 +0000 2010> Sleeping for 16 seconds

There are many advantages to this approach over some of the others that have been suggested:

- No host lists to maintain, it reconfigures itself dynamically on demand.

- It doesn’t rely on some other on-master fact like signed certificates, which breaks in models where the CA is separate.

- It doesn’t rely on stored configs, which don’t work well at scale or on a setup with many regional masters.

- It doesn’t suffer from issues if a node isn’t available but it’s in your host lists.

- It understands the state of the entire platform and so you can control concurrency and therefore resources on your master.

- It’s easy to extend with your own logic or demands; the current version of the code is only 90 lines of Ruby including CLI options parsing.

- Concurrency control can mitigate other problems. If you have a cluster of 10 nodes and don’t want your config change to restart them all at the same time, no problem: just make sure you only run 2 at a time.

In reality this means I can remove 256MB RAM from my master, since I can now run fewer puppetmasterd processes. This will save me $15/month in hosting fees on this specific master. It’s small change, but it’s always good to control my platform costs.