For ages I’ve wanted to be notified the moment Puppet changes a resource, and I’ve often been told this cannot be done without monkey patching nasty Puppet internals.

Those following me on Twitter have no doubt noticed my tweets complaining about the complexities of getting this done. I’ve now managed it, so I am happy to share the approach here.

The end result is that my previously mentioned event system is now getting messages sent the moment Puppet does anything. And I have callbacks at the event system layer so I can now instantly react to change anywhere on my network.

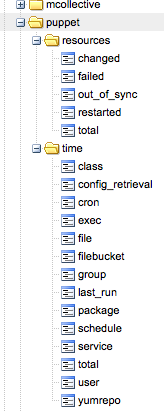

If a new webserver in a certain cluster comes up I can instantly notify the load balancer to add it, perhaps via a Puppet run of its own. This lets me build reactive cross node orchestration. Apart from the callbacks I also get run metrics in Graphite as seen in the image.

The best way I found to make Puppet do this is to create your own transaction report processor, which is the client side of a normal Puppet report. Here’s a shortened version of what I did. It’s overly complex and a pain to get working since Puppet Pluginsync still cannot sync out things like Applications.

The code overrides the finalize_report method to send the final run metrics and the add_resource_status method to publish events for changing resources. Puppet will use the add_resource_status method to add the status of a resource right after evaluating that resource. By tapping into this method we can send events to the network the moment the resource has changed.

    require 'puppet/transaction/report'

    class Puppet::Transaction::UnimatrixReport < Puppet::Transaction::Report
      def initialize(kind, configuration_version=nil)
        super(kind, configuration_version)

        @um_config = YAML.load_file("/etc/puppet/unimatrix.yaml")
      end

      def um_connection
        @um_connection ||= Stomp::Connection.new(@um_config[:user], @um_config[:password], @um_config[:server], @um_config[:port], true)
      end

      def um_newevent
        event = { ... } # skeleton event, removed for brevity
      end

      def finalize_report
        super

        metrics = um_newevent
        sum = raw_summary

        # add run metrics from raw_summary to metrics, removed for brevity

        Timeout::timeout(2) { um_connection.publish(@um_config[:portal], metrics.to_json) }
      end

      def add_resource_status(status)
        super(status)

        if status.changed?
          event = um_newevent

          # add event status to event hash, removed for brevity

          Timeout::timeout(2) { um_connection.publish(@um_config[:portal], event.to_json) }
        end
      end
    end
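To try the hook pattern in isolation, without Puppet or a message broker installed, something like the following rough sketch may help. Everything in it is a stand-in invented for illustration: `FakeReport`, `FakeStatus`, `RecordingPublisher`, `EventingReport`, the `/topic/example` destination and the event layout are assumptions, not Puppet or Stomp APIs. The only idea carried over from the code above is calling `super` first and then publishing inside a short timeout when the status reports a change.

```ruby
require 'json'
require 'timeout'

# Hypothetical stand-in for Puppet::Transaction::Report: it only collects statuses.
class FakeReport
  attr_reader :statuses

  def initialize
    @statuses = []
  end

  def add_resource_status(status)
    @statuses << status
  end
end

# Hypothetical stand-in for a resource status object.
FakeStatus = Struct.new(:resource, :changed) do
  def changed?
    changed
  end
end

# Hypothetical publisher that records messages instead of talking to a broker.
class RecordingPublisher
  attr_reader :messages

  def initialize
    @messages = []
  end

  def publish(destination, body)
    @messages << [destination, body]
  end
end

class EventingReport < FakeReport
  def initialize(publisher, destination)
    super()
    @publisher = publisher
    @destination = destination
  end

  def add_resource_status(status)
    super(status) # keep the normal report behaviour

    return unless status.changed?

    # invented event layout, purely for the example
    event = { "name" => status.resource, "changed" => true }

    # bound the publish so a slow broker cannot stall the run for long
    Timeout.timeout(2) { @publisher.publish(@destination, event.to_json) }
  end
end

publisher = RecordingPublisher.new
report = EventingReport.new(publisher, "/topic/example")

report.add_resource_status(FakeStatus.new("Service[httpd]", true))
report.add_resource_status(FakeStatus.new("File[/etc/motd]", false))

puts publisher.messages.length
```

Both statuses end up in the report, but only the changed one is published, which is the behaviour the real report class relies on.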

Finally, as I really have no need for sending reports to my Puppet Masters, I created a small Application that replaces the standard agent. This application has access to the report even when reporting is disabled, so the report will never get saved to disk or copied to the masters.

This application sets up the report using the class above and creates a log destination that feeds the logs into it. This is more or less exactly the Puppet::Application::Agent so I retain all my normal CLI usage like --tags and --test etc.

    require 'puppet/application'
    require 'puppet/application/agent'
    require 'rubygems'
    require 'stomp'
    require 'json'

    class Puppet::Application::Unimatrix < Puppet::Application::Agent
      run_mode :unimatrix

      def onetime
        unless options[:client]
          $stderr.puts "onetime is specified but there is no client"
          exit(43)
          return
        end

        @daemon.set_signal_traps

        begin
          require 'puppet/transaction/unimatrixreport'
          report = Puppet::Transaction::UnimatrixReport.new("unimatrix")

          # Reports get logs, so we need to make a logging destination that understands our report.
          Puppet::Util::Log.newdesttype :unimatrixreport do
            attr_reader :report

            match "Puppet::Transaction::UnimatrixReport"

            def initialize(report)
              @report = report
            end

            def handle(msg)
              @report << msg
            end
          end

          @agent.run(:report => report)
        rescue => detail
          puts detail.backtrace if Puppet[:trace]
          Puppet.err detail.to_s
        end

        if not report
          exit(1)
        elsif options[:detailed_exitcodes] then
          exit(report.exit_status)
        else
          exit(0)
        end
      end
    end

And finally I can now create a callback in my event system. This example is oversimplified, but the gist of it is that I am triggering a Puppet run on my machines with class roles::frontend_lb as a result of a web server starting on any machine with the class roles::frontend_web, which effectively gets newly created machines into the pool immediately. The Puppet run is triggered via MCollective, so I am using its discovery capabilities to run Puppet on all the instances of load balancers.

    add_callback(:name => "puppet_change", :type => ["archive"]) do |event|
      data = event["extended_data"]

      if data["resource_title"] && data["resource_type"]
        if event["name"] == "Service[httpd]"
          # only when its a new start, this is short for brevity you want to do some more checks
          if data["restarted"] == false && data["tags"].include?("roles::frontend_web")
            # does a puppet run via mcollective on the frontend load balancer
            UM::Util.run_puppet(:class_filter => "roles::frontend_lb")
          end
        end
      end
    end
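The event system behind `add_callback` is not shown here, so the following is only a guess at how such a filter could be exercised locally. `CallbackRegistry`, its `dispatch` method, the `"type"` key on events and the sample events are all hypothetical, and the `triggered` array stands in for the real `UM::Util.run_puppet` call.

```ruby
# Hypothetical, minimal callback registry; the real event system is not shown in the post.
class CallbackRegistry
  def initialize
    @callbacks = []
  end

  def add_callback(options, &block)
    @callbacks << { :name => options[:name], :types => Array(options[:type]), :block => block }
  end

  # Hands the event to every callback registered for its (assumed) "type" field
  def dispatch(event)
    @callbacks.each do |cb|
      cb[:block].call(event) if cb[:types].include?(event["type"])
    end
  end
end

triggered = []
registry = CallbackRegistry.new

registry.add_callback(:name => "puppet_change", :type => ["archive"]) do |event|
  data = event["extended_data"]

  if event["name"] == "Service[httpd]" &&
     data["restarted"] == false &&
     data["tags"].include?("roles::frontend_web")
    # stand-in for UM::Util.run_puppet(:class_filter => "roles::frontend_lb")
    triggered << "roles::frontend_lb"
  end
end

# Invented sample events for the example
web_start = {
  "type" => "archive",
  "name" => "Service[httpd]",
  "extended_data" => { "restarted" => false, "tags" => ["roles::frontend_web"] }
}

db_change = {
  "type" => "archive",
  "name" => "Service[mysqld]",
  "extended_data" => { "restarted" => false, "tags" => ["roles::db"] }
}

registry.dispatch(web_start)
registry.dispatch(db_change)

puts triggered.inspect
```

Only the web server start matches the filter, so a single load balancer run would be requested.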

Doing this via a Puppet run demonstrates to me where the balance lies between Orchestration and CM. You still need to be able to build a new machine, and that new machine needs to be in the same state as those that were managed using the Orchestration tool. So by using MCollective to trigger Puppet runs I know I am not doing anything out of bounds of my CM system; I am simply causing it to work when I want it to work.