Some time ago I showed sample code for driving MCollective with Cucumber. Today I’ll show how I did that with SimpleRPC.

Cucumber is a testing framework. It might not be the perfect fit for systems scripting, but you can achieve a lot if you bend it a bit to your will. Ultimately I am building up to using it for testing, but first we need to look at how to drive MCollective.

The basic idea is that you have written a SimpleRPC agent for your needs, like the one I showed in an earlier post. The agent I use can perform a number of tasks:

- Install, Uninstall and Update Packages

- Query NRPE status for a specific NRPE command

- Start, Stop and Restart Services

These features are all baked into a single agent, which makes it perfect for driving from a set of Cucumber features. The sample I show here only drives the iptables agent, since that code is public.

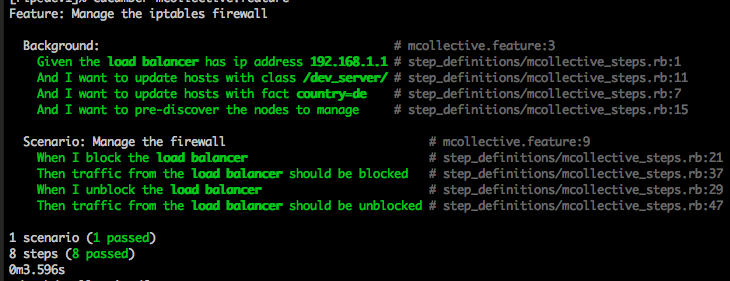

First I’ll show the feature I want to build. We’re still concerned with driving the agent here rather than testing, though the steps do verify their results and are idempotent:

```gherkin
Feature: Manage the iptables firewall

  Background:
    Given the load balancer has ip address 192.168.1.1
    And I want to update hosts with class /dev_server/
    And I want to update hosts with fact country=de
    And I want to pre-discover the nodes to manage

  Scenario: Manage the firewall
    When I block the load balancer
    Then traffic from the load balancer should be blocked

    # other tasks like package management, service restarts
    # and monitor tasks would go here

    When I unblock the load balancer
    Then traffic from the load balancer should be unblocked
```

To make the above work we need some setup code that starts our RPC client and manages its options in a single place. We’ll put this in support/env.rb:

```ruby
require 'mcollective'

World(MCollective::RPC)

Before do
  @options = {:disctimeout => 2,
              :timeout     => 5,
              :verbose     => false,
              :filter      => {"identity" => [], "fact" => [], "agent" => [], "cf_class" => []},
              :config      => "etc/client.cfg"}

  @iptables = rpcclient("iptables", :options => @options)
  @iptables.progress = false
end
```

First we load the MCollective code and mix it into the Cucumber World; this achieves roughly what `include MCollective::RPC` would, in a Cucumber-friendly way.

We then set some sane default options and start our RPC client.
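Cucumber builds a fresh World object for every scenario and mixes in the modules registered with `World`, so the `@options` and `@iptables` set up in the `Before` hook do not leak from one scenario to the next. The plain-Ruby sketch below imitates that behaviour so it can run without Cucumber or MCollective. `FakeRPC`, `new_world` and `run_before_hook` are hypothetical stand-ins; this is an analogy, not Cucumber's actual implementation.

```ruby
# Hypothetical stand-in for MCollective::RPC: it only provides rpcclient.
module FakeRPC
  FakeClient = Struct.new(:agent, :options, :progress)

  def rpcclient(agent, opts = {})
    FakeClient.new(agent, opts[:options], true)
  end
end

# Mimics what Cucumber does per scenario: a new plain object,
# extended with every module registered via World(...).
def new_world(*modules)
  world = Object.new
  modules.each { |mod| world.extend(mod) }
  world
end

# Same body as the Before hook in support/env.rb (options trimmed).
def run_before_hook(world)
  world.instance_eval do
    @options  = {:disctimeout => 2, :timeout => 5, :verbose => false}
    @iptables = rpcclient("iptables", :options => @options)
    @iptables.progress = false
  end
end

first  = new_world(FakeRPC)
second = new_world(FakeRPC)
run_before_hook(first)
run_before_hook(second)

# Changing one scenario's client does not affect the other scenario.
first.instance_eval { @iptables.progress = true }
puts second.instance_eval { @iptables.progress }
```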

Now we can move on to writing some steps, which we store in step_definitions/mcollective_steps.rb. First we want to capture some data, such as the load balancer IP and the filters:

```ruby
Given /^the (.+) has ip address (\d+\.\d+\.\d+\.\d+)$/ do |device, ip|
  @ips ||= {}
  @ips[device] = ip
end

Given /I want to update hosts with fact (.+)=(.+)$/ do |fact, value|
  @iptables.fact_filter fact, value
end

Given /I want to update hosts with class (.+)$/ do |klass|
  @iptables.class_filter klass
end

Given /I want to pre-discover the nodes to manage/ do
  @iptables.discover
  raise("Did not find any nodes to manage") if @iptables.discovered.size == 0
end
```

Here we build a table mapping device names to IP addresses and manipulate the MCollective filters. Finally we run discovery and check that we actually match some hosts; if your filters match no nodes, the Cucumber run bails out.
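To make the filter steps less opaque, here is a plain-Ruby sketch of the state they build up. It assumes the filter is the same hash of arrays passed as `:filter` in support/env.rb and that `fact_filter` and `class_filter` append to its `"fact"` and `"cf_class"` entries. `FilterSketch` is a hypothetical class, not MCollective's own code, and the real client may store entries differently between versions.

```ruby
# Hypothetical model of the filter state that the Given steps manipulate.
class FilterSketch
  attr_reader :filter

  def initialize
    # Same shape as the :filter option in support/env.rb
    @filter = {"identity" => [], "fact" => [], "agent" => [], "cf_class" => []}
  end

  # Roughly what "I want to update hosts with fact country=de" adds.
  def fact_filter(fact, value)
    @filter["fact"] << {:fact => fact, :value => value}
  end

  # Roughly what "I want to update hosts with class /dev_server/" adds.
  def class_filter(klass)
    @filter["cf_class"] << klass
  end
end

# The step's regex splits "country=de" into a fact name and a value.
step = "I want to update hosts with fact country=de"
fact, value = step.match(/I want to update hosts with fact (.+)=(.+)$/).captures

sketch = FilterSketch.new
sketch.fact_filter(fact, value)
sketch.class_filter("/dev_server/")
p sketch.filter
```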

Next we do the actual work of blocking and unblocking the load balancer:

```ruby
When /^I block the (.+)$/ do |device|
  raise("Unknown device #{device}") unless @ips.include?(device)

  @iptables.block(:ipaddr => @ips[device])

  raise("Not all nodes responded") unless @iptables.stats[:noresponsefrom].size == 0
end

When /^I unblock the (.+)$/ do |device|
  raise("Unknown device #{device}") unless @ips.include?(device)

  @iptables.unblock(:ipaddr => @ips[device])

  raise("Not all nodes responded") unless @iptables.stats[:noresponsefrom].size == 0
end
```

We only do basic sanity checks here: we catch nodes that did not respond and bail out if there are any. The key thing to note is that manipulating the firewalls on any number of machines takes roughly one line of code.
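Because the When steps only touch `block`, `unblock` and `stats`, their logic can be exercised without a live collective. The sketch below uses a hypothetical `StubIptablesClient` whose `stats[:noresponsefrom]` lists the nodes that did not reply, and factors the repeated response check into a hypothetical `ensure_all_responded` helper. The names and return shapes are assumptions chosen to mirror the step code, not the real SimpleRPC classes.

```ruby
# Hypothetical stand-in for the SimpleRPC iptables client.
class StubIptablesClient
  attr_reader :stats, :calls

  def initialize(nodes, silent = [])
    @nodes  = nodes
    @silent = silent            # nodes that will not reply
    @stats  = {:noresponsefrom => []}
    @calls  = []
  end

  def block(args)
    run(:block, args)
  end

  def unblock(args)
    run(:unblock, args)
  end

  private

  # Records the call and which nodes failed to answer, like stats would.
  def run(action, args)
    @calls << [action, args[:ipaddr]]
    @stats = {:noresponsefrom => @silent.dup}
    (@nodes - @silent).map { |node| {:sender => node, :data => {}} }
  end
end

# The check both When steps repeat, pulled into one place.
def ensure_all_responded(client)
  missing = client.stats[:noresponsefrom]
  raise("Not all nodes responded: #{missing.join(', ')}") unless missing.empty?
end

ips = {"load balancer" => "192.168.1.1"}

healthy = StubIptablesClient.new(%w[web1 web2])
healthy.block(:ipaddr => ips["load balancer"])
ensure_all_responded(healthy)     # passes silently

flaky = StubIptablesClient.new(%w[web1 web2], %w[web2])
flaky.block(:ipaddr => ips["load balancer"])
begin
  ensure_all_responded(flaky)
rescue RuntimeError => e
  puts e.message                  # Not all nodes responded: web2
end
```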

Now that we can block and unblock IPs, we also need a way to confirm those tasks were completed on every host:

```ruby
Then /^traffic from the (.+) should be blocked$/ do |device|
  raise("Unknown device #{device}") unless @ips.include?(device)

  # Count the hosts that report the IP as NOT blocked
  unblockedon = @iptables.isblocked(:ipaddr => @ips[device]).count do |resp|
    resp[:data][:output] =~ /is not blocked/
  end

  raise("Not blocked on: #{unblockedon} / #{@iptables.discovered.size} hosts") if unblockedon > 0
  raise("Not all nodes responded") unless @iptables.stats[:noresponsefrom].size == 0
end

Then /^traffic from the (.+) should be unblocked$/ do |device|
  raise("Unknown device #{device}") unless @ips.include?(device)

  # Count the hosts that still report the IP as blocked
  blockedon = @iptables.isblocked(:ipaddr => @ips[device]).count do |resp|
    resp[:data][:output] =~ /is blocked/
  end

  raise("Still blocked on: #{blockedon} / #{@iptables.discovered.size} hosts") if blockedon > 0
  raise("Not all nodes responded") unless @iptables.stats[:noresponsefrom].size == 0
end
```

This code actually verifies whether the clients have the IP blocked. It also highlights that my iptables agent could use some refactoring: both steps check for a string pattern in the result. If the agent returned a boolean in addition to the human-readable output, it would be easier to use from a program like this.
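Here is a sketch of what the boolean refactoring suggested above could look like from the caller's side. It assumes a hypothetical `:blocked` key returned alongside the existing `:output` string; the replies are made-up data so both approaches can be compared side by side. Note that counting with `count`, or an `inject` block that always returns the accumulator, matters here: a block such as `c += 1 if ...` evaluates to `nil` whenever the condition is false.

```ruby
# Made-up replies; :blocked is a hypothetical boolean the agent could add.
responses = [
  {:sender => "web1", :data => {:output => "192.168.1.1 is blocked",     :blocked => true}},
  {:sender => "web2", :data => {:output => "192.168.1.1 is not blocked", :blocked => false}},
  {:sender => "web3", :data => {:output => "192.168.1.1 is blocked",     :blocked => true}}
]

# Current approach: match the human-readable text.
not_blocked_by_text = responses.count { |r| r[:data][:output] =~ /is not blocked/ }

# With a boolean in the reply there is nothing to parse.
not_blocked_by_flag = responses.count { |r| !r[:data][:blocked] }

# Listing the offending hosts makes for a more useful failure message.
offenders = responses.reject { |r| r[:data][:blocked] }.map { |r| r[:sender] }

puts "#{not_blocked_by_text} / #{not_blocked_by_flag} not blocked: #{offenders.join(', ')}"
```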

That’s really all there is to it: MCollective RPC makes it easy to reuse code and to address networks of machines.

Monitoring / Infrastructure Testing

The code above demonstrates how, with MCollective and Cucumber, you can address any number of machines, perform actions and retrieve their state from within a testing framework. Since Cucumber is a testing framework this may seem an uncomfortable fit, but it doesn’t need to be.

Above I use Cucumber to drive actions, but it would be great to use this combination to test infrastructure state with something like cucumber-nagios. What MCollective brings to the table here is that your tests can change their behavior with the environment while breaking out of the single-box barrier.

With this you can easily write infrastructure tests that transcend machine boundaries. You could check the state of one set of variables on one set of machines and, based on their values, check that other machines are in a state consistent with them.

This lets us answer questions of the form ‘this machine is doing X; did the admin remember to do Y on another machine?’. Examples of this could be:

- If the backups are running, did the cron job that takes the database out of the service pool run? This would flag a problem at any time, even if someone is running the backups manually.

- How many Puppet daemons are currently applying manifests across all our nodes? Alert if there are more than 10. Even this simple case is hard: you need a real-time view of an application’s status across many nodes, based on information from right now rather than Nagios’s usual 5-minute window.

- If there are 10 concurrent puppetd runs happening right now, is the Puppet master coping? This test would stay green and ignore the master until many puppetd instances are applying manifests at once. So if your backups or some sysadmin action push up the load on the master, the check stays green; it only triggers when many Puppet clients are running. This could be a useful indicator for capacity planning.
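The backup example boils down to a rule that spans two groups of machines. In a real test each hash would come from an MCollective query (one agent reporting whether a backup is running, another reporting pool membership); here the data, host names and the `pool_violations` helper are all hypothetical, so the logic can run on its own.

```ruby
# Hypothetical state gathered from the backup hosts: is a backup running now?
backups_running = {"backup1" => true, "backup2" => false}

# Hypothetical state from the database hosts: which backup host targets
# them, and are they still in the service pool?
databases = {
  "db1" => {:backup_host => "backup1", :in_pool => true},
  "db2" => {:backup_host => "backup2", :in_pool => true},
  "db3" => {:backup_host => "backup1", :in_pool => false}
}

# A database is a problem only if its backup is running AND it is still in the pool.
def pool_violations(backups_running, databases)
  databases.select do |_db, state|
    backups_running.fetch(state[:backup_host], false) && state[:in_pool]
  end.keys
end

violations = pool_violations(backups_running, databases)
puts violations.empty? ? "OK" : "CRITICAL: still in pool during backup: #{violations.join(', ')}"
```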

These simple cases are generally hard for systems like Nagios: it’s difficult to track the state of many checks, apply logic, and go CRITICAL only when a combination of factors adds up to a failure. With MCollective and Cucumber we can build such test cases fairly easily.

The code here does not show you how to do that per se, but it does show how natural and easy it is to interact with your network of hosts via MCollective and Ruby. In future I might post more code showing how to build on these ideas and create test suites as described. As an example, a test case for the Puppet Master example above might be:

```gherkin
Feature: Monitor the capacity of the Puppet Master

  Background:
    Given we know we can run 10 concurrent Puppet clients
    And the Puppet Master load average should be below 2

  Scenario: Monitor the Puppet Master capacity
    When there are more than usual Puppet clients running
    Then the Puppet Master should have an acceptable load average
```

Running this under cucumber-nagios would achieve our stated goals.
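The decision this feature encodes can be sketched as a small predicate. The thresholds of 10 clients and a load average of 2 come from the Background steps; `master_capacity` and its inputs are hypothetical, and in practice the client count would come from a puppetd status query and the load from the master itself.

```ruby
MAX_CLIENTS = 10   # "we know we can run 10 concurrent Puppet clients"
MAX_LOAD    = 2.0  # "the Puppet Master load average should be below 2"

# Returns [status, message]. The master's load only matters once
# more Puppet clients than usual are running.
def master_capacity(running, master_load)
  if running <= MAX_CLIENTS
    [:ok, "#{running} clients running, not checking master load"]
  elsif master_load < MAX_LOAD
    [:ok, "#{running} clients running, master load #{master_load} is acceptable"]
  else
    [:critical, "#{running} clients running, master load #{master_load} >= #{MAX_LOAD}"]
  end
end

[[3, 5.0], [12, 1.5], [12, 3.2]].each do |running, master_load|
  status, message = master_capacity(running, master_load)
  puts "#{status.to_s.upcase}: #{message}"
end
```

A high load with few clients running stays green, which matches the behaviour described for backups or manual sysadmin work.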

As a small postscript, figuring out how many Puppet daemons are currently applying their manifests is trivial with the puppetd agent:

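```ruby
require 'mcollective'

include MCollective::RPC

puppetd = rpcclient("puppetd")
puppetd.progress = false

# Each node reports :running; summing the replies gives the total
running = puppetd.status.inject(0) { |c, status| c + status[:data][:running] }

puts("Currently running: #{running}")
```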

```
$ ruby test.rb
Currently running: 3
```
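To turn that count into the "alert if more than 10" check from the list above, the sum can be wrapped in a Nagios-style result. Nagios plugins report state through their exit code (0 OK, 1 WARNING, 2 CRITICAL, 3 UNKNOWN). The sketch uses made-up replies shaped like the `status` results above, assuming `:running` is 0 or 1 per node, so it runs without a collective; `running_check` is a hypothetical name.

```ruby
# Made-up puppetd status replies; :running is assumed to be 0 or 1 per node.
replies = Array.new(12) { |i| {:sender => "node#{i}", :data => {:running => i.even? ? 1 : 0}} }

# Nagios plugin convention: exit 0 for OK, 2 for CRITICAL.
def running_check(replies, threshold)
  # to_i treats a missing :running value as 0
  running = replies.inject(0) { |c, reply| c + reply[:data][:running].to_i }
  if running > threshold
    [2, "CRITICAL: #{running} puppetd daemons applying manifests (> #{threshold})"]
  else
    [0, "OK: #{running} puppetd daemons applying manifests"]
  end
end

code, message = running_check(replies, 10)
puts message
# A real plugin would finish with: exit(code)
```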